BOINC over a cluster

Message boards : Number crunching : BOINC over a cluster

| Author | Message |

|---|---|

Dragokatzov Joined: 5 Oct 05 Posts: 25 Credit: 2,446,376 RAC: 0 |

I see over at the Folding@home forums there's a sticky with LOTS of information on setting up a diskless stack. I was wondering, would it be possible to run BOINC with Rosetta on one of these? How many CPUs does BOINC support anyway? Thanks for your information! Victory is the ONLY option! |

|

Honza Joined: 18 Sep 05 Posts: 48 Credit: 173,517 RAC: 0 |

It is up to you how many CPUs BOINC can use. Just go to the General preferences ( https://boinc.bakerlab.org/rosetta/prefs.php?subset=global ) and set "On multiprocessors, use at most ... processors". It should be fine with 8 if you get to a quad dual-core Opteron machine, for example. When running a farm, I would suggest using BOINCView ( http://boincview.amanheis.de/ ) to monitor/control machines. You can run BOINC over a network, from a Ramdrive or even from a flash disk [only CPDN is not suitable for this since it is quite HD space and I/O demanding]. |

Tern Joined: 25 Oct 05 Posts: 576 Credit: 4,699,932 RAC: 0 |

One warning is that you need a separate installation of BOINC for each host, and each needs its own access to the internet. If your "stack" appears to the OS as a single computer with multiple processors, all is well; if each boots individually, you have multiple "hosts", and you'll have to do a bit more work to make BOINC work correctly.

|

|

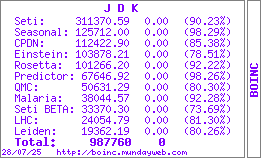

J D K Joined: 23 Sep 05 Posts: 168 Credit: 101,266 RAC: 0 |

|

|

John McLeod VII Joined: 17 Sep 05 Posts: 108 Credit: 195,137 RAC: 0 |

There is nothing that I have seen in the code that limits the number of CPUs. However, the communications between the BOINC daemon and the project applications is through shared memory - and AFAIK clusters do not do shared memory, thus requiring a separate installation for each host.   BOINC WIKI |

River~~ Joined: 15 Dec 05 Posts: 761 Credit: 285,578 RAC: 0 |

"There is nothing that I have seen in the code that limits the number of CPUs. However, the communications between the BOINC daemon and the project applications is through shared memory - and AFAIK clusters do not do shared memory, thus requiring a separate installation for each host." In principle either a software hack (*) or a hardware hack could provide shared memory across a cluster. But nobody has done one as far as I know, because you would not want to anyway. With many CPUs sharing the same memory you reach a bottleneck where they are fighting one another for access to that memory. Even if memory speeds can be pushed a little, the speed of light limits how far the memory can be away from the chip at a given clock speed. If you had a 1GHz memory bus, for example, light would travel 1 foot (30cm) in one clock cycle (1ns), and because of the ask/fetch model (the request has to go out and the data has to come back) that limits the memory to be within 6in (15cm). Or 4in (10cm), as the signals travel at 'only' 2/3 the speed of light. The consequence is that two real CPUs will not give quite twice the crunch of a single CPU; by the time you get to four CPUs in one box the effect should be noticeable even if they are real CPUs in separate sockets. If you got to (say) 64 CPUs the whole thing would be memory bound and adding more CPUs would just slow it down. The CPUs would spend more time haggling over memory access than doing real work. Well before we see the 64-CPU motherboard, my guess is we will see motherboards with several groups of 2-4 CPUs, each group having its own RAM... River~~ (*) The software hack is to associate a file with the shared memory area, and have every member of the cluster use the same file. If the word 'performance' has just walked across your mind, you just independently figured out why you wouldn't want to do it... |
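A quick sanity check of the distance figures above, as a minimal sketch. It assumes a request and its reply must both fit inside one bus cycle (the "ask/fetch" model in the post); the function name and the `velocity_factor` parameter are made up for illustration.

```python
# Back-of-envelope check of the memory-distance figures in the post above.
C = 299_792_458.0  # speed of light in vacuum, metres per second


def max_memory_distance_cm(bus_hz, velocity_factor=1.0):
    """Furthest the memory can sit from the CPU if a request and its
    reply must both complete within a single bus cycle.

    velocity_factor is the signal speed as a fraction of c
    (an assumption; the post uses 2/3 for real signals).
    """
    cycle_seconds = 1.0 / bus_hz
    # Divide by 2 because the signal has to travel there and back.
    one_way_metres = C * velocity_factor * cycle_seconds / 2.0
    return one_way_metres * 100.0


vacuum_cm = max_memory_distance_cm(1e9)           # roughly 15 cm
real_cm = max_memory_distance_cm(1e9, 2.0 / 3.0)  # roughly 10 cm
```

Doubling `bus_hz` halves both distances, which is the point of the argument: faster buses force the memory physically closer to the chip.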

River~~ Joined: 15 Dec 05 Posts: 761 Credit: 285,578 RAC: 0 |

"You can run BOINC over a network, from a Ramdrive or even from a flash disk." Ouch! Please don't use a flash disk directly. You only have a few times 10,000 rewrites on a flash disk before you wear out the memory. The flash mechanism is fine for photos and passing files from box to box, but not for uses where the file is repeatedly re-written. Remember it was developed from write once read many (WORM) memories used for BIOS, and so on. It is just not designed for this job. Reading a flash memory does not wear it out, writing does. If you do want to run from a flash drive, figure out how to make your flash disk copy itself onto a ramdisk before BOINC starts and copy itself back after it stops. This means of course that a power failure wipes out all work that hasn't been reported, as it is all on the ramdisk, but on Rosetta we're used to poor checkpointing anyway ;-(. Linux users might be able to achieve the same effect by setting 'async' in fstab - but check whether the kernel really does no writes till umount. Personally I would still want to do a copy to ramdisk before and after because I haven't looked at the kernel code. If you are running Rosetta it is less of a problem than running Einstein - Rosetta only does about 10 checkpoints per wu, Einstein (Albert app) 1 checkpoint every few sec. Rosetta would take only a thousand wu to wear out the memory in your slot directories. That is three wu a day for a year. Albert could wear it out in a few dozen wu...

|
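A rough sketch of the two ideas in the post above: the wear arithmetic, and the "copy to ramdisk before, copy back after" wrapper. Everything here is an assumption for illustration: the function names are made up, the wear estimate assumes each checkpoint rewrites the same flash cells once (no wear levelling), and the wrapper assumes the ramdisk is already mounted and that Python 3.8+ is available.

```python
import shutil
import subprocess
from pathlib import Path


def work_units_until_worn(rewrite_limit, checkpoints_per_wu):
    """Work units before a cell hits its rewrite limit, assuming every
    checkpoint rewrites the same cells once (a pessimistic assumption)."""
    return rewrite_limit // checkpoints_per_wu


def run_from_ramdisk(flash_dir, ram_dir, command):
    """Copy flash_dir to ram_dir, run command there, then copy back.

    The flash disk is only read at start-up and written once at the end,
    instead of being rewritten at every checkpoint. As the post warns,
    a power failure loses everything still held in ram_dir.
    """
    flash_dir, ram_dir = Path(flash_dir), Path(ram_dir)
    # Reading flash does not wear it out, so this copy is harmless.
    shutil.copytree(flash_dir, ram_dir, dirs_exist_ok=True)
    try:
        # All checkpoint writes now land on the ramdisk.
        return subprocess.run(command, cwd=ram_dir).returncode
    finally:
        # One bulk write back to flash. Files deleted on the ramdisk
        # are not removed from flash by this simple copy.
        shutil.copytree(ram_dir, flash_dir, dirs_exist_ok=True)
```

With the figures from the post, `work_units_until_worn(10_000, 10)` gives 1000 work units. A call such as `run_from_ramdisk("/mnt/flash/boinc", "/mnt/ramdisk/boinc", ["./boinc"])` would be the intended use, where both paths and the client command are hypothetical and depend on your own installation.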

|

PCZ Joined: 16 Sep 05 Posts: 26 Credit: 2,024,330 RAC: 0 |

What you have seen described as a diskless stack are PXE boot nodes. I and many others use these. Basically you have one Server which runs DHCP, TFTP and NFS. The nodes are just basic MBs without any HDs. The nodes boot up over the network and load a kernel via TFTP. The OS is then mounted as an NFS share from the Server. There are other NFS shares set up for the various DC projects that you want to run. So the nodes have no HDs and all their files are on a central Server. However, once booted they are individual entities; each node runs its own copy of BOINC. You control them via telnet or SSH. Each one of the diskless nodes is in fact a Linux PC but without Screen or HDs. Folks use these clusters because the node cost is very low, just MB, RAM, CPU and PSU. Adding extra nodes only takes seconds; all that is required to add a node is a quick edit of dhcpd.conf to add the MAC address and some NFS exports for the new node. These PXE stacks are not true clusters, in that they are not slaves to the central server; they are individual PCs using resources provided by a Server but not controlled by it. |
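To make the "quick edit" concrete, here is a hedged sketch in Python that only formats the two pieces of text mentioned above: a host entry for dhcpd.conf and a line for /etc/exports. The node name, MAC address, IP address, export path and NFS options are all made-up examples, not PCZ's actual setup, and a real PXE configuration also needs boot-file settings (for example `next-server` and `filename`) that are not shown here.

```python
def dhcpd_host_entry(name, mac, ip):
    """Return an ISC dhcpd.conf host declaration that pins a node's
    MAC address to a fixed IP address."""
    return (
        f"host {name} {{\n"
        f"    hardware ethernet {mac};\n"
        f"    fixed-address {ip};\n"
        f"}}\n"
    )


def nfs_export_line(path, ip, options="rw,sync,no_root_squash"):
    """Return an /etc/exports line sharing path with a single node.
    The default options are an assumption; pick what suits your setup."""
    return f"{path} {ip}({options})\n"


# Hypothetical new node: every value below is a placeholder.
entry = dhcpd_host_entry("node05", "00:11:22:33:44:55", "192.168.0.15")
export = nfs_export_line("/diskless/node05", "192.168.0.15")
```

After appending the generated text to the two files, the DHCP server normally needs a restart and the NFS exports need re-reading before the new node can boot.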

|

raukondil Joined: 6 Jan 06 Posts: 4 Credit: 7,171,619 RAC: 3,728 |

Does anyone have experience using openMosix with BOINC? I've read sometimes in forums that this is not possible, but I'm not quite sure... I would be happy to know if it is possible... @DEV-team: do I have to change the code of the BOINC manager or just the project code? Answers are welcome. Greetings, David |

Paul D. Buck Joined: 17 Sep 05 Posts: 815 Credit: 1,812,737 RAC: 0 |

The current structure and design of BOINC does not support this concept. Long term there is some vague plan to maybe do something along these lines. But the demand for this type of hosting is not really there. And, with BOINC running on a per-node basis, it simulates a very loosely coupled cluster ... For example, I have 9 computers, all running BOINC, and each computer manages its work but the main account records all the information from my "cluster". Also, I manage the group of computers with BOINCView as a "Cluster Console" ... So, without development work on BOINC it is not currently possible. The biggest stumbling block is the use of shared memory segments to send messages between the BOINC Daemon and the Science Applications. Changes would have to include modifications to the science applications also, or a "stub" Daemon with shared memory that would run on each cluster node, linked to a BOINC Daemon/Manager on the cluster control using some other mechanism. Bottom line, probably not worth the effort as there is no particular gain to be had. |

dcdc Joined: 3 Nov 05 Posts: 1834 Credit: 124,082,264 RAC: 23,137 |

"You can run BOINC over a network, from a Ramdrive or even from a flash disk." JFI you can do this using the WinXP embedded (XPe) Enhanced Write Filter (by overwriting a couple of files from XPe to XP). There's loads of info on it at www.mp3car.com. I'm working on one at the moment but having I/O problems with my CF IDE converter... |

River~~ Joined: 15 Dec 05 Posts: 761 Credit: 285,578 RAC: 0 |

"What you have seen described as a discless stack are PXE boot nodes." Sounds interesting. I've already got Linux going on my LAN, but so far with several separate boxes and fixed IP addresses. Can you recommend a good link to get me going in DHCP, TFTP, NFS & PXE, none of which I've used before? Or even better, would someone like to write an article for the Wiki on getting from a typical Linux distro up to a diskless stack of this kind? As well as learning the software, I also need to know stuff like how do I work out how much power is needed (same question really as how many MBs can I run off one power supply if there are no extra HDs on the extra MBs) and similar practical ideas. And thank you *very* much to Dragokatzov for asking this question - it's given me something to think about instead of going out and buying another 5 second-hand boxes. River~~ |

|

BennyRop Joined: 17 Dec 05 Posts: 555 Credit: 140,800 RAC: 0 |

I've seen the descriptions of how to set up "headless crunchers" for FaH, but opted for replacing my work system with a dual core CPU instead of experimenting with headless crunchers. Don't some of the other BOINC clients have writeups on how to set up "headless crunchers" to use PXE to boot up and run their client? Then all you should need to do is match the headless machines to Rosetta's requirements and change the client used. |

Paul D. Buck Joined: 17 Sep 05 Posts: 815 Credit: 1,812,737 RAC: 0 |

"Or even better, would someone like to write an article for the Wiki on getting from a typical Linux distro up to a diskless stack of this kind?" Since he has the most recent experience, perhaps River~~ might do it ... |

spacemeat Joined: 15 Dec 05 Posts: 1 Credit: 961,743 RAC: 0 |

"Sounds interesting. I've already got Linux going on my LAN, but so far with several separate boxes and fixed IP addresses. Can you recommend a good link to get me going in DHCP, TFTP, NFS & PXE, none of which I've used before?" http://www.gentoo.org/doc/en/diskless-howto.xml |

|

TPR_Mojo Joined: 20 Sep 05 Posts: 4 Credit: 684,947 RAC: 0 |

Rather than look into a HOW-TO, just download a preconfigured Linux distro designed for this. I use K12LTSP from K12LTSP.org and it runs diskless nodes fine on BOINC projects right out of the box. Configuration help can be found at my team's site http://forums.teamphoenixrising.net |

networkman Joined: 19 Jan 06 Posts: 1 Credit: 1,004,646 RAC: 0 |

Hmm.. interesting.. "Beer is proof that God loves us and wants us to be happy." - Benjamin Franklin

|


©2025 University of Washington

https://www.bakerlab.org