Maximum CPU time Exceeded...How about some granted credit!

Message boards : Number crunching : Maximum CPU time Exceeded...How about some granted credit!


The Gas Giant (Joined: 20 Sep 05, Posts: 23, Credit: 58,591, RAC: 0)

I've had a few WUs recently where the computation errors out with "Maximum CPU time exceeded". These had typically run for 6 hours or so, and I've lost about two days of CPU time because of it (not all of these WUs were from the _205_ batch). Any chance of getting credit for these WUs?

Live long and crunch.
Paul (S@H1 8888)
Do as I say, not as I do!

Tern (Joined: 25 Oct 05, Posts: 576, Credit: 4,700,857, RAC: 9)

Paul, I don't know for sure, but I have certainly asked that they be granted credit regardless of WU name. The problem, I believe, is that "maximum_cpu_time" is not a flat number of seconds but is adjusted by your benchmarks and DCF (duration correction factor). I have Rosetta WUs that run 20 hours or more on a slow Mac and don't get this error, but there the _expected_ time is close to that. Meanwhile, a fast PC that has had a bunch of the "short" WUs (driving down the DCF) gets one that runs 6 hours, which would normally be fine, and it's suddenly an error. The max time was set to stop WUs that run forever, and normally that would be a good thing; it's just that in combination with all the "short" computation-error WUs, it's not working as it should. We'll probably know more in a day or two.
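A minimal sketch of the scaling Tern describes, assuming the CPU-time ceiling is a per-workunit operation budget divided by the host's benchmarked speed, optionally multiplied by the project's DCF (his hedged belief, not a confirmed detail). The function `max_cpu_seconds`, its parameters, and all the numbers are hypothetical, chosen only to show the direction of the effect.

```python
def max_cpu_seconds(fpops_bound: float, benchmark_flops: float, dcf: float = 1.0) -> float:
    """Hypothetical CPU-time ceiling for one task, in seconds.

    Assumes the ceiling is an operation budget divided by the host's
    benchmarked speed, optionally scaled by the project's duration
    correction factor (DCF). A higher benchmark or a lower DCF both
    shrink the ceiling.
    """
    if benchmark_flops <= 0:
        raise ValueError("benchmark_flops must be positive")
    return fpops_bound / benchmark_flops * dcf


# Illustrative numbers only: the same workunit on a host with stock
# benchmarks, then on one whose optimised client reports 1.5x the speed.
bound = 3.6e13                                    # hypothetical operation budget
stock = max_cpu_seconds(bound, 1.0e9)             # 36,000 s = 10 h
inflated = max_cpu_seconds(bound, 1.5e9)          # 24,000 s, about 6.7 h
after_short_wus = max_cpu_seconds(bound, 1.5e9, dcf=0.8)  # about 5.3 h

for label, secs in [("stock", stock), ("inflated", inflated), ("low DCF", after_short_wus)]:
    print(f"{label:>9}: {secs / 3600:.1f} h")
```

Anything that raises the reported benchmark or lowers the DCF shrinks the ceiling, even though the workunit needs exactly as much real work as before.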


River~~ (Joined: 15 Dec 05, Posts: 761, Credit: 285,578, RAC: 0)

All I will add to Bill's comment is: make sure the failed results are allowed to be returned to the server. If you are in this situation and are tempted to reset the project or detach, please wait at least until the failed WU disappears naturally from the Work window. For technical reasons it will be very difficult for the project team to grant credit for results that have not been reported as failed. For those that are reported, the most likely outcome is that you will eventually get credit equal to the credit claimed, but this is a guess rather than a promise.

R~~

The Gas Giant (Joined: 20 Sep 05, Posts: 23, Credit: 58,591, RAC: 0)

Just had another WU error out at 5 hrs 10 min on my P4 HT machine (3.2 GHz, overclocked to 3.64 GHz). This is the first time in a while that this machine has run Rosetta, due to a problem with CPDN deadlines and an early sulphur WU. The WU that errored out was the 2nd or 3rd WU it had completed, and, true to Bill's comments, the first ones were fairly quick and the DCF had dropped a fair amount. I must admit I am using the optimised BOINC client as well, since I am also running the optimised SETI@home application, so your comment about the max CPU time being tied to the benchmarks and DCF makes sense.

This is an interesting conundrum for folks doing multiple projects with one of them being SETI@home and using the optimised client/app. I'll have to think about whether it's worthwhile to continue running Rosetta, from both the project's perspective and mine. I don't want good WUs erroring out and I don't want to lose credit, but I do want to continue running Rosetta. Could the project maybe look at increasing the max CPU figure and ignoring the DCF, since WU times are all over the place?

Live long and crunch, and a very happy New Year to all.

P.S. Where did the background in my sig disappear to?

Paul (S@H1 8888)
Do as I say, not as I do!

Tern (Joined: 25 Oct 05, Posts: 576, Credit: 4,700,857, RAC: 9)

> This is an interesting conundrum for folks doing multiple projects with one of them being SETI@home and using the optimised client/app.

The DCF is "per project": you can have a 0.2 DCF for SETI and a 1.5 DCF for Rosetta. No problems there; I have almost exactly that on my Mac Mini with Altivec-optimized SETI and the very slow Rosetta Mac app. The problem comes in when you get some very short _Rosetta_ WUs followed immediately by a long one. That, and I suspect that in trying to minimize the harm from the "sticking at 1%" WUs, they set the "maximum time on the Cobblestone reference computer" pretty low.
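To illustrate how a per-project DCF can drift, here is a sketch with an assumed update rule: a task that overruns makes the factor jump straight up, while tasks that finish early pull it down only 10% of the way each time. The rule, the `update_dcf` helper, and the numbers are illustrative assumptions, not BOINC's actual code.

```python
def update_dcf(dcf: dict[str, float], project: str,
               actual_s: float, raw_estimate_s: float) -> float:
    """Update one project's duration correction factor (DCF) in place.

    Assumed rule (illustrative): if the observed ratio of actual time to
    raw estimate exceeds the current factor, jump straight to it;
    otherwise move 10% of the way toward it. `raw_estimate_s` is the
    estimate *before* the DCF is applied.
    """
    ratio = actual_s / raw_estimate_s
    current = dcf[project]
    dcf[project] = ratio if ratio > current else 0.9 * current + 0.1 * ratio
    return dcf[project]


# Hypothetical starting values, similar to the Mac Mini example above.
dcf = {"SETI@home": 0.2, "Rosetta@home": 1.5}

# Five short Rosetta WUs, each finishing in 30% of the raw estimate,
# pull Rosetta's factor down; SETI's entry is never touched.
for _ in range(5):
    update_dcf(dcf, "Rosetta@home", actual_s=6_000, raw_estimate_s=20_000)
print(dcf)

# A long WU needing twice the raw estimate then starts with the low
# factor in place; only once it completes does the factor jump up.
update_dcf(dcf, "Rosetta@home", actual_s=40_000, raw_estimate_s=20_000)
print(dcf["Rosetta@home"])
```

Because each project keeps its own entry, a run of short Rosetta WUs cannot affect SETI's factor, but it does leave Rosetta's factor low just when the next long Rosetta WU starts.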


Snake Doctor (Joined: 17 Sep 05, Posts: 182, Credit: 6,401,938, RAC: 0)

> This is an interesting conundrum for folks doing multiple projects with one of them being SETI@home and using the optimised client/app.

I just got one of these myself, for WU "MORE_FRAGS_1r69_222_4322_0". It seems kind of strange to implement a solution on a global basis to fix a local issue like sticking at 1%. More often than not, the 1% issue comes from people not setting up their systems properly: either not keeping the WU in memory during a switch, or not setting the switching interval long enough. This is a user problem, not a Rosetta problem. My system just lost over 7 hours on this WU when it failed because of the CPU time issue, and I have had WUs in the past that ran for over 20 hours without an issue.

Unfortunately, I had just upgraded to BOINC 5.2.13 and got my G4 Dual crunching this project again after a month of chasing down the problem that was keeping it out of the loop. Every other WU in my queue is a "MORE_FRAGS" followed by a "NO_BARCODE_FRAGS", with a few "NEW_SOFT_CENTROID_PACKING" and other types thrown in for good measure. If the project simply has to implement a global solution, then perhaps making sure that a particular system only gets a specific type of WU, based on its processing time, would be a better way to go. Clearly, with such divergent processing times, this issue will continue to get worse.

Regards
Phil

EDIT: Perhaps you could check more than just the CPU time a WU is taking before erroring it out. Looking at the percent complete as well, and then deciding whether it is stuck or just taking a while, might be a better way to go.

We must look for intelligent life on other planets, as it is becoming increasingly apparent we will not find any on our own.
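Phil's suggestion can be sketched as a check that looks at percent complete before calling a task stuck. The two-tier limit (a soft limit after which progress must still be rising, plus a hard ceiling for true runaways), the `check_task` function, and the example figures are all hypothetical.

```python
HOUR = 3600


def check_task(cpu_s: float, progress: float, prev_progress: float,
               soft_limit_s: float, hard_limit_s: float) -> str:
    """Hypothetical stuck-or-slow check using CPU time *and* progress.

    progress and prev_progress are fractions in [0, 1] sampled at the
    current and previous check.
    """
    if cpu_s >= hard_limit_s:
        return "abort: hard ceiling reached"  # runaway, whatever the progress
    if cpu_s < soft_limit_s:
        return "ok"                           # normal run time, no questions asked
    # Past the soft limit: tolerate the task only while it keeps advancing.
    if progress > prev_progress:
        return "ok: slow but progressing"
    return "abort: no progress"


# Illustrative limits: 6 h soft, 24 h hard (long enough for 20+ hour Mac runs).
SOFT, HARD = 6 * HOUR, 24 * HOUR
print(check_task(7 * HOUR, 0.85, 0.80, SOFT, HARD))  # ok: slow but progressing
print(check_task(9 * HOUR, 0.01, 0.01, SOFT, HARD))  # abort: no progress
```

The hard ceiling keeps the original protection against WUs that would run forever, while the progress test spares WUs that are merely long.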

The Gas Giant (Joined: 20 Sep 05, Posts: 23, Credit: 58,591, RAC: 0)

I went into the client_state file and increased the DCF figure for Rosetta, and the problem has gone away for the moment on the machine in question. Another machine currently has a WU in progress (INCREASE_CYCLES_10_1dtj_226_4391_1) that is at 2 hrs 23 min CPU time and only 30% done, with another 3 hrs 54 min to completion. Before this WU I had a few WUs that took only around 2 hrs, so it looks as though this one may error out as well, but I'll keep an eye on it.

I tend to agree with Snake Doctor: only error the WU out if the progress % has not increased after the CPU time reaches, say, 20% of the estimated To Completion time.

Live long and crunch.

P.S. It's good to see the background on my sig came back.

Paul (S@H1 8888)
Do as I say, not as I do!
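Paul's proposed rule, sketched as a small watchdog: abort only if progress has not increased for a stretch of CPU time equal to 20% of the estimated time to completion. `StallWatchdog` and its fields are hypothetical; the sample figures come from the INCREASE_CYCLES WU above (2 h 23 min CPU, 30% done, 3 h 54 min to go).

```python
from dataclasses import dataclass


@dataclass
class StallWatchdog:
    """Hypothetical watchdog: abort only when progress has not increased
    for `window_fraction` of the estimated time to completion."""
    window_fraction: float = 0.2
    last_progress: float = 0.0
    last_change_cpu_s: float = 0.0

    def should_abort(self, cpu_s: float, progress: float, est_remaining_s: float) -> bool:
        if progress > self.last_progress:
            # Progress moved: remember when, and never abort on this sample.
            self.last_progress = progress
            self.last_change_cpu_s = cpu_s
            return False
        stalled_for = cpu_s - self.last_change_cpu_s
        return stalled_for >= self.window_fraction * est_remaining_s


watchdog = StallWatchdog()
start = 2 * 3600 + 23 * 60        # 8,580 s of CPU time so far
remaining = 3 * 3600 + 54 * 60    # 14,040 s estimated to completion
print(watchdog.should_abort(start, 0.30, remaining))          # False: progress moved
print(watchdog.should_abort(start + 2_000, 0.30, remaining))  # False: stalled 2,000 s < 2,808 s
print(watchdog.should_abort(start + 3_000, 0.30, remaining))  # True: stalled 3,000 s >= 2,808 s
```

With those figures the stall window is 0.2 × 3 h 54 min, about 47 min, so the WU would be aborted only if its progress sat at 30% for that long; a WU that advances at least once per window is never killed.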


J D K (Joined: 23 Sep 05, Posts: 168, Credit: 101,266, RAC: 0)

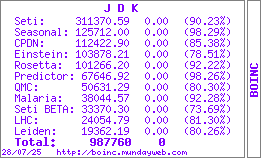

I'm running the optimized client and app with no problems, crunching SETI, Rosetta and Predictor on a 3.0 GHz P4 with HT enabled and 1 GB of memory.

BOINC Wiki


The Gas Giant (Joined: 20 Sep 05, Posts: 23, Credit: 58,591, RAC: 0)

At the risk of opening myself up to ridicule and derision over the amount of optimisation and overclocking on my machines (and hence their higher benchmarks), below is a list of WUs that have errored out:

- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4346998
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4471098
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4016382
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4099470
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4151210
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4151110
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4151020
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4428403
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4209811
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4363700
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4428129
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=4444790
- https://boinc.bakerlab.org/rosetta/workunit.php?wuid=3760889

On quite a few of these WUs I am not the only person to run into trouble. That is a lot of lost CPU time.

Live long and crunch.


Grutte Pier [Wa Oars]~MAB The Frisian (Joined: 6 Nov 05, Posts: 87, Credit: 497,588, RAC: 0)

Any news yet on crediting time-exceeded WUs?

Snake Doctor (Joined: 17 Sep 05, Posts: 182, Credit: 6,401,938, RAC: 0)

> Any news yet on crediting time-exceeded WUs?

No news from the project yet on these, but they are still working on a different problem right now. With any luck they will decide that these "CPU TIME EXCEEDED" WUs will get credit. The problem is clearly a bug in the way they have implemented a solution to a different problem, not a user or client issue. A number of us are losing a lot of CPU time processing these, and they would probably complete successfully if not aborted by the system. I have seen some WUs run for as long as 22 hours in the past, so to arbitrarily decide that anything taking longer than 7 hours is stuck is just not right. Hopefully someone from the project will chime in on this issue soon. I am seeing almost as many "Exceeded" WUs as successful ones at this point.

Regards
Phil

We must look for intelligent life on other planets, as it is becoming increasingly apparent we will not find any on our own.

[B^S] Paul@home (Joined: 18 Sep 05, Posts: 34, Credit: 393,096, RAC: 0)

Got a couple of these myself... hopefully they can fix the problem!

Cheers,
Paul

Wanna visit the BOINC Synergy team site? Join BOINC Synergy Team

The Gas Giant (Joined: 20 Sep 05, Posts: 23, Credit: 58,591, RAC: 0)

And BOINC doesn't increase the estimated CPU time when a WU errors out at the higher CPU time, which doesn't help.
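A sketch of the feedback gap described here, under the assumption that only successfully completed tasks update the DCF. `next_dcf` and the figures (a DCF of 0.6 and a 4-hour raw estimate) are hypothetical; the 5 h 10 min run time is the failure reported earlier in the thread.

```python
def next_dcf(dcf: float, actual_s: float, raw_estimate_s: float, errored: bool) -> float:
    """Hypothetical DCF update after one task finishes.

    Assumption: only tasks that complete successfully feed back into the
    DCF. A task killed at the CPU ceiling returns the old value unchanged,
    so the next workunit is estimated exactly as before.
    """
    if errored:
        return dcf
    ratio = actual_s / raw_estimate_s
    # Overruns raise the factor at once; underruns pull it down gently.
    return ratio if ratio > dcf else 0.9 * dcf + 0.1 * ratio


raw_estimate = 4 * 3600           # hypothetical uncorrected estimate
ran_for = 5 * 3600 + 10 * 60      # 5 h 10 min, then killed at the ceiling
print(next_dcf(0.6, ran_for, raw_estimate, errored=True))   # 0.6: nothing learned
print(next_dcf(0.6, ran_for, raw_estimate, errored=False))  # about 1.29 if it had counted
```

If errored tasks are ignored, a host whose DCF was driven down by short WUs keeps the same low estimate, so under Tern's account of the limit the next long WU meets the same ceiling.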

The Gas Giant (Joined: 20 Sep 05, Posts: 23, Credit: 58,591, RAC: 0)

Looks like some of the WUs are now getting deleted. Is anything going to happen with these?

Tern (Joined: 25 Oct 05, Posts: 576, Credit: 4,700,857, RAC: 9)

> Looks like some of the WUs are now getting deleted. Is anything going to happen with these?

I don't know about any specific ones. I know they said in another thread that deleted WUs weren't a problem because they were archived, and that credit would be granted from the archived data on Monday. No idea which ones will GET credit... I'm certainly hoping they err on the side of "give too much" rather than "give too little".


River~~ (Joined: 15 Dec 05, Posts: 761, Credit: 285,578, RAC: 0)

Me too... and the project folks know that is what we are hoping for. As credit is purely for the benefit of the donors, and as nobody has posted anything objecting to benefit-of-the-doubt credits, the only real reason to withhold credit would be if it was a really rare case and the code to cover that case was especially hard. We will all know on Monday, Seattle time (noting that Monday in Seattle will be Tuesday for some of us...).

Snake Doctor (Joined: 17 Sep 05, Posts: 182, Credit: 6,401,938, RAC: 0)

I would hope that, in addition to granting credit for these (which, as you point out, is strictly a "give me" to the user community), someone is working on a better fix for the 1% issue than simply deciding that if a WU has run for a certain period of time it must be stuck. Not one of the WUs that ran out of time on my system was stuck, and I think every one of them would have run to a successful conclusion had it been allowed to complete. This root problem wastes project computing time and directly reduces the amount of science that can be done per unit time.

Interestingly, I am seeing a number of other systems failing on the same WUs that I am seeing as "too much time". Most of the PC systems fail with some kind of exception error on these WUs, so on the surface it looks like a different problem. I suspect, however, that they are related; it just produces a different error on the Mac than on the PC.

Thanks, Bill, for chiming in on this. I hope you can bring the root issue to the attention of the project team. All they need to do here is check both the percent complete and the CPU time to determine whether a WU is actually stuck. Right now it looks as though all they are looking at is CPU time.

Regards
Phil

We must look for intelligent life on other planets, as it is becoming increasingly apparent we will not find any on our own.

River~~ (Joined: 15 Dec 05, Posts: 761, Credit: 285,578, RAC: 0)

Hi Phil, yes, the project team are well aware of this and a number of other issues. Which one they prioritise is a moot point, but we should not see any of these errors again once the last stragglers come through. As you suggested, Bill does email them from time to time with outstanding issues.

R~~

The Gas Giant (Joined: 20 Sep 05, Posts: 23, Credit: 58,591, RAC: 0)

Just to reiterate a point or two here. None of the WUs that I have listed were stuck at 1%! They were all at least 50% of the way through, and some as high as 90%, when they were automatically aborted due to the maximum CPU time. The only WU that was stuck at 1%, I aborted myself after 9 hrs of crunching (overnight); it is the only WU to have exceeded 6 or 7 hrs without being automatically aborted. I have since read that if you stop and restart BOINC, a WU that has been stuck at 1% is very likely to complete successfully.

Live long and crunch.
Paul.

Snake Doctor (Joined: 17 Sep 05, Posts: 182, Credit: 6,401,938, RAC: 0)

> Just to reiterate a point or two here. None of the WUs that I have listed were stuck at 1%! They were all at least 50% of the way through, and some as high as 90%, when they were automatically aborted due to the maximum CPU time. The only WU that was stuck at 1%, I aborted myself after 9 hrs of crunching (overnight); it is the only WU to have exceeded 6 or 7 hrs without being automatically aborted. I have since read that if you stop and restart BOINC, a WU that has been stuck at 1% is very likely to complete successfully.

Paul, this has been precisely my experience as well. All of the WUs were aborted by the system during an otherwise normal run, usually at around 80-90% after 6-7 hours of CPU time. Just this morning I had to abort one myself that was stuck at 1%, but it is the first time I have seen one of these. It was easy to tell it was stuck from a simple look at the Activity Monitor.

Regards
Phil

We must look for intelligent life on other planets, as it is becoming increasingly apparent we will not find any on our own.


©2025 University of Washington

https://www.bakerlab.org