Problems and Technical Issues with Rosetta@home

Message boards : Number crunching : Problems and Technical Issues with Rosetta@home


Terminal* · Joined: 23 Nov 05 · Posts: 6 · Credit: 7,845,878 · RAC: 0

I have a few 40-core machines I'm trying to get work on, and they just keep getting refused new work :( Do you guys take local hardware donations? I could part with several PowerEdge 2950s. Most of the links on https://boinc.bakerlab.org/rosetta/rah_donations.php are broken.

Greg_BE · Joined: 30 May 06 · Posts: 5770 · Credit: 6,139,760 · RAC: 0

Oh! Well, that is good news then. I guess it was just a bit of bad timing on our end here in Europe. Here's to smoother running in the future.

I fired up more make_work daemons to hopefully catch up with the work demand. We have plenty of work queued up, but our daemons were having trouble catching up. Hopefully the updates I just made will help.

|

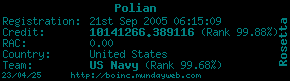

Polian · Joined: 21 Sep 05 · Posts: 152 · Credit: 10,141,266 · RAC: 0

googloo · Joined: 15 Sep 06 · Posts: 137 · Credit: 23,975,196 · RAC: 8

I'm running out of Rosetta tasks again. Ready to send: 22.

Sid Celery · Joined: 11 Feb 08 · Posts: 2541 · Credit: 47,118,286 · RAC: 389

Just mopping up the last couple of the 55 WCG tasks I had to grab on Sunday morning when the scheduler was switched off. I've returned 15 Rosetta tasks since midnight, but only 1 has validated. The other 14 are still showing as pending, some from 15 hours ago, even though the validators are showing as running on the server status page.

Edit: And I'm struggling to get tasks, just as googloo reports. 2,726 ready to send. In progress is very low at 305k, compared to a high of 2-3m during the last week.

Sid Celery · Joined: 11 Feb 08 · Posts: 2541 · Credit: 47,118,286 · RAC: 389

Tasks are now coming down and all delayed validation is up to date. Thanks, all.

David E K (Volunteer moderator, Project administrator, Project developer, Project scientist) · Joined: 1 Jul 05 · Posts: 1480 · Credit: 4,334,829 · RAC: 0

Is anyone still having issues getting work?

googloo · Joined: 15 Sep 06 · Posts: 137 · Credit: 23,975,196 · RAC: 8

> Is anyone still having issues getting work?

Better now, thanks.

8/11/2014 12:28:31 PM | rosetta@home | Scheduler request completed: got 20 new tasks

Sid Celery · Joined: 11 Feb 08 · Posts: 2541 · Credit: 47,118,286 · RAC: 389

> Is anyone still having issues getting work?

I thought I was, but I think this is the definition of no:

11/08/2014 23:50:48 | rosetta@home | update requested by user

TPCBF · Joined: 29 Nov 10 · Posts: 111 · Credit: 6,042,546 · RAC: 24

> Is anyone still having issues getting work?

No, now I am getting new WUs, more than before, but for some strange reason SETI@HOME, which "shares" the CPU cycles on that machine, "isn't getting any"... :-(

Mod.Sense (Volunteer moderator) · Joined: 22 Aug 06 · Posts: 4018 · Credit: 0 · RAC: 0

> Is anyone still having issues getting work?
>
> No, now I am getting new WUs, more than before, but for some strange reason SETI@HOME, which "shares" the CPU cycles on that machine, "isn't getting any"... :-(

This is normal. BOINC Manager is trying to maintain your defined resource share between the two projects. When your machine went without R@h work for a period of time, it was completing more work for SETI than your resource share would normally allow. So SETI got ahead of schedule (where "schedule" is relative to your defined resource shares). To re-establish the balance, BOINC Manager will now request additional work from R@h to even things out. Since R@h only fell behind for a day or two, it should only take a day or two for the two projects to reach parity again, after which you should get back to a stream of work in proportion to the configured resource shares.

Rosetta Moderator: Mod.Sense
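To make the catch-up behaviour described above more concrete, here is a minimal toy model in Python. It is only an illustration under stated assumptions: a single CPU, one-hour scheduling slots, equal 50/50 resource shares, and a simple "run whichever project is furthest behind its share" rule. The function names (`debt`, `pick_project`, `simulate`) are hypothetical, and this is not the BOINC client's actual scheduler, which also weighs other factors such as deadlines.

```python
from typing import Dict, List, Set


def debt(shares: Dict[str, float], used: Dict[str, float], project: str) -> float:
    """CPU-hours a project is owed: its fair fraction of all time used so far,
    minus what it actually got. Positive means it is behind its share."""
    total_share = sum(shares.values())
    total_used = sum(used.values())
    return shares[project] / total_share * total_used - used[project]


def pick_project(shares: Dict[str, float], used: Dict[str, float],
                 available: Set[str]) -> str:
    """Choose the available project that is furthest behind its share.
    Ties go to the alphabetically first name (max keeps the first maximum)."""
    return max(sorted(available), key=lambda p: debt(shares, used, p))


def simulate(shares: Dict[str, float], schedule: List[Set[str]]) -> List[str]:
    """Hand out one CPU-hour per slot; schedule[i] is the set of projects
    that have work available in hour i. Returns who ran in each hour."""
    used = {p: 0.0 for p in shares}
    ran = []
    for available in schedule:
        project = pick_project(shares, used, available)
        used[project] += 1.0
        ran.append(project)
    return ran


if __name__ == "__main__":
    shares = {"R@h": 50, "SETI": 50}  # equal resource shares (assumed)
    # One day where only SETI has work, then two days where both do.
    schedule = [{"SETI"}] * 24 + [{"R@h", "SETI"}] * 48
    ran = simulate(shares, schedule)
    print("Hours 24-47 all R@h:", ran[24:48] == ["R@h"] * 24)
    print("Totals:", ran.count("R@h"), ran.count("SETI"))
```

In this toy run, after a 24-hour stretch where only SETI had work, the model gives R@h the next 24 hours straight and then alternates, so both projects end up with equal totals. That mirrors the "a day or two behind, a day or two to catch up" pattern, though the real client's timings will differ.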

neil · Joined: 22 Dec 06 · Posts: 3 · Credit: 18,395,041 · RAC: 63

Greetings: I'm not convinced this is 'normal'. My PC currently runs Rosetta and World Community Grid (WCG). If I set Rosetta to 'No new tasks' mode and let the system catch up, meaning compute all of the Rosetta work, then let the PC run nothing but WCG for a week or two, all I have to do is set Rosetta to 'Allow new tasks' and let it run for 24 to 48 hours before the system is swamped with Rosetta tasks and WCG is all but completely shut out. The Rosetta jobs all start 'Running, high priority' as the system comes to them and move to the top of the queue.

I don't think Rosetta plays well with other projects. If I had to guess, not being extremely knowledgeable about how the BOINC algorithm works, I'd say it's because of the short deadlines assigned to work units. The majority of the Rosetta and WCG work units have similar estimated run times. As it now stands on my system (I enabled Rosetta again yesterday), I have 175 Rosetta units and 64 WCG units.

Maybe I'm all wet, but it does appear that Rosetta is much more aggressive in its share of resources than WCG, in this case (I've run other projects with it as well). I'm getting close to removing Rosetta from my system because of this.

My two cents...

Miklos M · Joined: 8 Dec 13 · Posts: 29 · Credit: 5,277,251 · RAC: 0

I am getting the extra-long WUs, and a couple of them have so far resulted in errors. I did not abort any units, at least not lately. My computer is set for a one-day time limit per unit, but these units seem to need up to 50 hours per CPU.

Murasaki · Joined: 20 Apr 06 · Posts: 303 · Credit: 511,418 · RAC: 0

Greetings: Yep, sounds normal to me. From your description, the debt balance between WCG and Rosetta is skewed. You may have had a time when your system was only able to do WCG tasks (for example, during the Rosetta server strain at the start of August), and your client is now trying to compensate by downloading more Rosetta work. Depending on how BOINC interprets the "no new tasks" setting, you are either just maintaining the existing problem (freezing the comparative debt levels between the two projects) or making it worse (Rosetta's debt increases while its tasks are frozen).

> I don't think Rosetta plays well with other projects. If I had to guess, not being extremely knowledgeable about how the BOINC algorithm works, I'd say it's because of the short deadlines assigned to work units.

I run Rosetta and WCG too and don't see any of the problems you describe. In fact, Rosetta usually gives a week longer than WCG for task deadlines.

I'd suggest setting "no new tasks" for both projects to finish all existing work, then removing both projects and reattaching. That should clear any debt issues for both projects. Alternative solutions would be to:

yep, that happened to me as well.

Mod.Sense (Volunteer moderator) · Joined: 22 Aug 06 · Posts: 4018 · Credit: 0 · RAC: 0

> Maybe I'm all wet, but it does appear that Rosetta is much more aggressive in its share of resources than WCG, in this case (I've run other projects with it as well).

As described by others, there are many factors that go into which projects the BOINC Manager chooses to run and to request work from. But it's important to keep in mind that it is the BOINC Manager that "decides", not the projects. It is trying to optimize what can be conflicting goals.

The only thing I can think of that might make it look like R@h is overtaking other projects is that some R@h tasks have an extended time (over an hour) between checkpoints. To preserve and maximize the work your machine produces, the BOINC Manager will sometimes wait to interrupt a task until it reaches a checkpoint. But this would not cause an effect over the course of days.

Another possibility is that R@h tasks use significantly more memory than many other projects. Depending on how much memory your BOINC Manager is configured to use, it might get knee-deep into an R@h task that needs more memory to complete, and to make that possible the BOINC Manager might hold other tasks until memory usage goes back down. The BOINC Manager can also play by different rules when a project is marked for no new work, when tasks are suspended, when its run-time estimates are off, etc.

I'm certainly *NOT* calling you all wet, but please don't make the current observations the reason for withdrawing your support of the project. There is a moderator (the BOINC Manager) between the project and your machine's resources. It is certainly possible it is not doing the best job at it, but more likely it is just looking at the debts, etc., and actually trying to enforce the resource shares you set up between the projects.

Rosetta Moderator: Mod.Sense

Miklos M · Joined: 8 Dec 13 · Posts: 29 · Credit: 5,277,251 · RAC: 0

683255938 | 619208054 | 15 Aug 2014 7:50:59 UTC | 25 Aug 2014 17:55:37 UTC | Over | Validate error | Done | 67,088.14 | --- | ---

The errors keep coming. Perhaps it's time to delete those new 48+ hour WUs.

Murasaki · Joined: 20 Apr 06 · Posts: 303 · Credit: 511,418 · RAC: 0

> 683255938 | 619208054 | 15 Aug 2014 7:50:59 UTC | 25 Aug 2014 17:55:37 UTC | Over | Validate error | Done | 67,088.14 | --- | ---

You seem to have a mix of units with different types of errors. I have reported your issues with long file names on the Minirosetta 3.52 thread. You may want to report the other types of issues you are seeing there.

Edit: I see you already mentioned the long task name a couple of posts above mine in that thread.

Looking through your recent tasks, I can see only a few validate/compute errors caused by the system. However, a large number of errors were reported as tasks aborted by the user. It would probably be useful to explain further why you aborted them. Were they not checkpointing? Were they all running x% longer than your preferred run time, as you mentioned above?

Miklos M · Joined: 8 Dec 13 · Posts: 29 · Credit: 5,277,251 · RAC: 0

I aborted them last night since it seems all the 48+ hour ones were in some way ending in errors. The usual 24-25 hour ones have no problems. I am waiting until Rosetta sends the shorter ones again.

683255938 | 619208054 | 15 Aug 2014 7:50:59 UTC | 25 Aug 2014 17:55:37 UTC | Over | Validate error | Done | 67,088.14 | --- | ---

David E K (Volunteer moderator, Project administrator, Project developer, Project scientist) · Joined: 1 Jul 05 · Posts: 1480 · Credit: 4,334,829 · RAC: 0

> I am getting the extra-long WUs, and a couple of them have so far resulted in errors. I did not abort any units, at least not lately. My computer is set for a one-day time limit per unit, but these units seem to need up to 50 hours per CPU.

What are the names of these work units? Sorry if you've already mentioned them below; I just can't find them. Thanks!

If there are bad work units that you believe are general errors resulting from a bug, please feel free to email me directly with the name and ID of the work unit (the name is enough, though). dekim at u dot washington dot edu

Sid Celery · Joined: 11 Feb 08 · Posts: 2541 · Credit: 47,118,286 · RAC: 389

> I also seem to have massive problems with downloading

After a few months, it's back again... :(


©2026 University of Washington

https://www.bakerlab.org