Report stuck & aborted WU here please - II


| Author | Message |

|---|---|

|

Delk Joined: 20 Feb 06 Posts: 25 Credit: 995,624 RAC: 0 |

https://boinc.bakerlab.org/rosetta/result.php?resultid=17550826 Stuck, so I've aborted. The truncate_* units were removed due to a problem; why not the FA_* units? The only FA units still going around are broken, from what I've seen, so it's really annoying since they don't time out at all. If you want more people on the project, quality needs to be improved to reduce churn. I'm not just talking about workunit or app issues (a la 4.97), but also things like the down-for-maintenance notice today; what about at least 24 or 48 hrs notice? This really isn't anything new: change control processes (which include client communication) are something a lot of us deal with every working day. My 2 cents.

|

|

Laurenu2 Joined: 6 Nov 05 Posts: 57 Credit: 3,818,778 RAC: 0 |

But what about the removal of bad WUs from your servers? You must set up a way to stop the resending of the BAD WUs. Letting the system purge itself is not right. You have the capability to do auto upgrades; you should have the capability to auto abort bad WUs on the client side. To let bad WUs run on your system or ours is a BAD THING. Well David, it seems you cannot or did not REMOVE the bad WUs. I and others are still getting them. I just found this one, TRUNCATE_TERMINI_FULLRELAX_1b3aA_433_126_0, that WASTED another 28 Hrs. This is not good. I am nearing the end of my patience with these BAD jobs and the THOUSANDS+ of Hrs of wasted work time that you will not give points for. David, I AM VERY UPSET ABOUT THIS. If You Want The Best You Must forget The Rest ---------------And Join Free-DC---------------- |

|

Mike Gelvin Joined: 7 Oct 05 Posts: 65 Credit: 10,612,039 RAC: 0 |

Aborted 1.04% https://boinc.bakerlab.org/rosetta/result.php?resultid=17045924

|

|

Jose Joined: 28 Mar 06 Posts: 820 Credit: 48,297 RAC: 0 |

[quote]It looks like maybe "Rhiju's" error trap worked and terminated the Work Unit. If so it should claim some credit.[/quote] Well, my account states that for that work unit the following credits were claimed but not granted: result ID 17504849, work unit 14375734, sent 18 Apr 2006 9:59:32 UTC, reported 18 Apr 2006 21:53:49 UTC, server state Over, outcome Client error, client state Computing, CPU time 32,858.34, claimed credit 101.95, granted credit ---. See, the issue for me goes past the credit stuff [although I would be dishonest if I didn't admit I want all the credits possible added to my team totals, as we are facing a vicious stampede by some very annoying cows (LOL LOL LOL... yes, I have a sense of humor)]: it is seeing all that precious computing time not generating useful work that is worrying me. BTW my life partner is considering suing Rosetta@home for loss of consortium... Partner claims I am addicted to the screen saver and that I am becoming slightly nuttier than when we met. :P "This and no other is the root from which a Tyrant springs; when he first appears he is a protector." Plato |

dag Joined: 16 Dec 05 Posts: 106 Credit: 1,000,020 RAC: 0 |

https://boinc.bakerlab.org/rosetta/workunit.php?wuid=11846000 FA_RLXpt_hom006_1ptq__361_479 1.04%, 17+ hours... takes a lickin' and keeps on tickin'! This will be a good test to see if credit will be eventually awarded as was stated elsewhere in this thread. dag dag --Finding aliens is cool, but understanding the structure of proteins is useful. |

|

CremionisD Joined: 10 Mar 06 Posts: 9 Credit: 37,604,006 RAC: 0 |

Workunit aborted manually. "Truncate_termini_fullrelax_1b3a_433_628_0" - Model 1, step 241723, at 1.04% CPU time ~24:30:00 Result ID = 17022725, (Workunit = 13954214) |

|

tralala Joined: 8 Apr 06 Posts: 376 Credit: 581,806 RAC: 0 |

Such a feature exists and was recently used by cpdn.org to reset the faulty models they had sent out. It's called a "reverse trickle" or "killer trickle". But the client still has to contact the server before the server can respond with a "killer trickle". However, any contact should do. |

|

Rhiju Volunteer moderator Joined: 8 Jan 06 Posts: 223 Credit: 3,546 RAC: 0 |

Based on the great advice from this forum, I coded a "watchdog" thread for Rosetta@home. It will output any data and abort work units that haven't changed their score in thirty minutes -- a pretty good indicator that the job is stuck! I'll be testing this over on RALPH over the next couple days. I'm also thinking of putting in an abort if the CPU time is more than twice the maximum time for the workunit (typically 4 hours by default these days, or whatever the client's preference)... that's another sign that the workunit is not compatible with the client. Are there any other conditions under which you think we should abort? Looking forward to some more advice. I think we're on the way to finally bringing an end to these stuck jobs.
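A minimal sketch of the two abort conditions described in the post above, written in Python for illustration only. It is not the project's watchdog code: the class name `Watchdog`, its fields, and the way scores and timings are fed in are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional

STALL_LIMIT_S = 30 * 60  # thirty minutes without a score change, per the post


@dataclass
class Watchdog:
    """Hypothetical watchdog state; names are illustrative, not Rosetta's."""
    max_cpu_s: float                      # user's target runtime preference, in seconds
    stall_limit_s: float = STALL_LIMIT_S
    last_score: Optional[float] = None    # most recent score seen
    last_change_s: float = 0.0            # time at which the score last changed

    def check(self, score: float, now_s: float, cpu_s: float) -> Optional[str]:
        """Return a reason to abort, or None if the job looks healthy."""
        # Any change in score counts as progress and resets the stall timer.
        if self.last_score is None or score != self.last_score:
            self.last_score = score
            self.last_change_s = now_s
        # Condition 1: score unchanged for the whole stall window.
        if now_s - self.last_change_s >= self.stall_limit_s:
            return "stalled"
        # Condition 2: CPU time more than twice the maximum time.
        if cpu_s > 2 * self.max_cpu_s:
            return "cpu_overrun"
        return None
```

In this sketch a caller would invoke `check` periodically from a separate thread and, on a non-`None` result, write out whatever data exists before ending the work unit. How the real application does that is not stated in the thread.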

|

|

Jose Joined: 28 Mar 06 Posts: 820 Credit: 48,297 RAC: 0 |

My apologies if I sounded like I was bitching. I better take a break from the screen...but drat...those amino acid chains dancing all over the screen are so addictive :) Peace and ty for all your effort to make this project an efficient one. "This and no other is the root from which a Tyrant springs; when he first appears he is a protector." Plato |

|

Laurenu2 Joined: 6 Nov 05 Posts: 57 Credit: 3,818,778 RAC: 0 |

I have seen the above post, but you obviously missed the post from me where I said the auto abort at 48 Hrs is not working: "...Well David, I let this one, FA_RLXpt_hom002_1ptq__361_380_3, run through 48 Hrs. It did not self abort as you said it would and send itself in. IT JUST RESTARTED, and it most likely was the 3rd time it restarted. That is 142 Hrs of wasted CPU time." I think I aborted it at 7.5 Hrs, but I was watching all the way through 48 Hrs, and then the clock went to 00:00. So I know for a fact that it had at least 55 Hrs that I know of, and it might have done the loop 5 times. But you only grant credit for the 7.5 Hrs recorded after the loop reset. So not only is the 48 Hrs auto abort not working the way you want it to, the granting of credit is not working the way it should either. And also this post where I said: "...I'm sorry, I cannot. I looked for it but could not find it. Your system for tracking WUs is hard for me to use. It might work OK for me if I had only a few nodes working this, but I have over 50 nodes working this project, and jobs get lost with so many pages of WUs. It might help if you put in page numbers (1 to 10, 20, 30, 40, 50) instead of just NEXT PAGE. If you want to tell me how to get the info you want, I will try to retrieve it for you. Is there a file in my BOINC folder that I can open to get this info? I looked through hundreds of pages of returned WUs, and that was only 3 days' worth (VERY HARD to work with for a power user). I still do not know how all the other posters are getting and posting the links they are. So you tell me what you want me to do, or to retrieve from my network. Just remember I am NOT in the IT field, so please be kind." If You Want The Best You Must forget The Rest ---------------And Join Free-DC---------------- |

|

Laurenu2 Joined: 6 Nov 05 Posts: 57 Credit: 3,818,778 RAC: 0 |

[quote]As a personal aside I might point out that the usefulness of your posting is directly related to the information you provide concerning the problem. Could you at least provide a link to the result ID so the problem can be examined?[/quote] OK, after spending the last 1.5 Hrs looking, I found it. Note the time sent and the time sent back: over 104 Hrs. That means this job was on its 3rd loop (48 + 48 + 7). Now look at the granted points: 8.25156419314919 for a job that ran for 104 Hrs. Now you can see why I was saying your auto abort and your granting of due credit are not working, and the real need to find a way to purge BAD WUs from the Rosetta servers and the members' clients. It is unfair for Rosetta to make us members pay the bills to purge the system of your bad WUs. Result ID: 17079919; Name: FA_RLXpt_hom002_1ptq__361_380_3; Workunit: 11796498; Created: 12 Apr 2006 9:46:52 UTC; Sent: 12 Apr 2006 11:29:20 UTC; Received: 16 Apr 2006 19:13:28 UTC; Server state: Over; Outcome: Client error; Client state: Computing; Exit status: -197 (0xffffff3b); Computer ID: 178772; Report deadline: 26 Apr 2006 11:29:20 UTC; CPU time: 1716.296875; stderr out: <core_client_version>5.2.13</core_client_version> <message>aborted via GUI RPC </message> <stderr_txt> # random seed: 2485491 # random seed: 2485491 # random seed: 2485491 # random seed: 2485491 </stderr_txt>; Validate state: Invalid; Claimed credit: 8.25156419314919; Granted credit: 8.25156419314919; Application version: 4.98. If You Want The Best You Must forget The Rest ---------------And Join Free-DC---------------- |
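As a rough cross-check of the figures in this result record, the wall-clock gap between "Sent" and "Received" can be set against the reported CPU time. The sketch below is in Python; the helper `parse_utc` and the variable names are made up for this example, the CPU time is assumed to be in seconds, and the month parsing assumes an English locale.

```python
from datetime import datetime, timezone

FMT = "%d %b %Y %H:%M:%S"  # matches stamps such as "12 Apr 2006 11:29:20"


def parse_utc(stamp: str) -> datetime:
    """Parse a timestamp from the result page and mark it as UTC."""
    return datetime.strptime(stamp, FMT).replace(tzinfo=timezone.utc)


sent = parse_utc("12 Apr 2006 11:29:20")
received = parse_utc("16 Apr 2006 19:13:28")

# Wall-clock hours the result spent on the volunteer's machine.
elapsed_hours = (received - sent).total_seconds() / 3600

# CPU time as recorded in the result record, converted from seconds to hours.
cpu_hours = 1716.296875 / 3600

print(f"elapsed: {elapsed_hours:.2f} h, recorded CPU: {cpu_hours:.2f} h")
```

Under these assumptions the elapsed time comes out a little under 104 hours while the recorded CPU time is under half an hour, which fits the point being made: the credited CPU time covers only a small part of the time the job actually occupied the machine.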

|

AMD_is_logical Joined: 20 Dec 05 Posts: 299 Credit: 31,460,681 RAC: 0 |

Laurenu2, assuming you know which computer had the WU, you can find it as follows: (1) click on "Participants" on any Rosetta@home web page; (2) click on "View computers"; (3) click on the computer ID for the computer that ran the WU; (4) click on the number on the "Results" line. You will now have a list of results for just that computer. That WU was a nasty one. The first person who got it wasted 57 hours of CPU on it before noticing and aborting it, then the second person wasted another 14 hours. The third person let it sit in the queue until it went past deadline. Then you got it. At least now it's had too many errors and won't be sent out any more. |

Snake Doctor Joined: 17 Sep 05 Posts: 182 Credit: 6,401,938 RAC: 0 |

As a personal aside I might point out that the usefulness of your posting is directly related to the information you provide concerning the problem. Could you at least provide a link to the result ID so the problem can be examined? What is unfair is for people to bash the developers and the people who are trying to help you and others with the issues. The errors are frustrating for everyone, most of all the project people. While I am sure it may be difficult for you to manage certain aspects of your farm if things aren't perfect, you built the farm. The work and expense is just part of what is required if you plan to run it. While you have made it very clear that the 30 or so credits you may have lost on this WU should be the single most important thing in the universe to the project, just try to get ANY of the credits for a failed work unit on ANY of the other projects (and yes, ALL projects have failed WUs). The Rosy people are doing what they can on the credit issue, and it is a lot more than what you get from other venues. But there are other things going on right now, so they only do it once a week. Why don't you just post the error, with sufficient information to help them fix the problem, without all the bashing of the project people? It has already been explained that BOINC does not allow the project to remove WUs from your system, and why. The only project that does this at all is CPDN, and they are using a highly modified version of the BOINC client to do it. This project uses the standard BOINC package for everything, including the results pages. If you are having a problem with BOINC you should post the issue on the BOINC development site. As for looking up the links to your results, have you tried bookmarking a separate page in your browser for each machine? It is very easy to do. That way you can isolate the results by machine, and monitor them individually if something comes up. That should help you find things.
Also, if you reduce your connection interval and increase your time setting you will have fewer WUs to look through. |

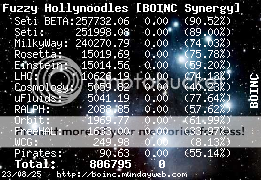

Fuzzy Hollynoodles Joined: 7 Oct 05 Posts: 234 Credit: 15,020 RAC: 0 |

[quote]Based on the great advice from this forum, I coded a "watchdog" thread for Rosetta@home. It will output any data and abort work units that haven't changed their score in thirty minutes -- a pretty good indicator that the job is stuck! I'll be testing this over on RALPH over the next couple days. I'm also thinking of putting in an abort if the CPU time is more than twice the maximum time for the workunit (typically 4 hours by default these days, or whatever the client's preference)... that's another sign that the workunit is not compatible with the client. Are there any other conditions under which you think we should abort? Looking forward to some more advice. I think we're on the way to finally bringing an end to these stuck jobs.[/quote] Thanks! :-) A function to kill a WU that's stuck because the time for swapping between projects (e.g. 1 hour) is too short for it to create a new checkpoint, so that the WU starts all over again and again, would also be nice. This could keep people from wasting endless time on one WU for nothing, since keeping WUs in memory while preempted is not necessary anymore. [b]"I'm trying to maintain a shred of dignity in this world." - Me[/b]

|

Cureseekers~Kristof Joined: 5 Nov 05 Posts: 80 Credit: 689,603 RAC: 0 |

some errors: https://boinc.bakerlab.org/rosetta/result.php?resultid=16591648 https://boinc.bakerlab.org/rosetta/result.php?resultid=16619607 Member of Dutch Power Cows |

|

Laurenu2 Joined: 6 Nov 05 Posts: 57 Credit: 3,818,778 RAC: 0 |

Snake Doctor, I am sorry if I am a little slower than most people here like you. I have my problems, which I will not go into right now. But if you think me trying to explain a bug that I found (endless loop) is bashing, then with the long letter posted below I think you are the one doing the bashing, at me. WHY? It may be true that I am a little frustrated, and perhaps it may show through, but I was not bashing. I worked hard for Hrs trying to find that WU and learn how to find the info they wanted, and I did post it. Is that bashing? NO. AGAIN, my point here is that the Rosetta system needs to have in place a method to remove bad work on servers and clients. To think this will not happen again is unwise. 30 points, hahaha. I make over 17,000 points a day; do you really think I am concerned with a mere 30 points? You need to get a reality check. I am quite sure I have had around 1200 Hrs of CPU time stuck on these. All the wasted time is what concerns me. If You Want The Best You Must forget The Rest ---------------And Join Free-DC---------------- |

Cureseekers~Kristof Joined: 5 Nov 05 Posts: 80 Credit: 689,603 RAC: 0 |

After more than 30 hours runtime, and stuck for hours at the same percentage, I aborted the job: https://boinc.bakerlab.org/rosetta/result.php?resultid=17454155 260 credits lost... Member of Dutch Power Cows |

Feet1st Joined: 30 Dec 05 Posts: 1755 Credit: 4,690,520 RAC: 0 |

[quote]Are there any other conditions under which you think we should abort? Looking forward to some more advice. I think we're on the way to finally bringing an end to these stuck jobs.[/quote] What about when the deadline is approaching? Sometimes people crank their preference straight from 4 hrs to 24 hrs, and all of a sudden 10 WUs cannot be completed before deadline. So, if the deadline is "near" (how near?), then just finish the current model and end this WU so it can be reported in time. I don't know if you would call that an "abort". It's more of a normal end, in advance of the target runtime. Great progress! Add this signature to your EMail: Running Microsoft's "System Idle Process" will never help cure cancer, AIDS nor Alzheimer's. But running Rosetta@home just might! https://boinc.bakerlab.org/rosetta/ |
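One way to picture the "finish the current model and end early" suggestion above is a check made between models. This is only a sketch in Python under stated assumptions: the function name `should_finish_early`, the use of an average time per model, and the one-hour safety margin are all hypothetical and do not come from the project.

```python
def should_finish_early(now_s: float, deadline_s: float,
                        avg_model_s: float,
                        safety_margin_s: float = 3600.0) -> bool:
    """Return True when starting another model risks missing the deadline.

    All arguments are in seconds. The margin is a guess at how much time
    is needed to upload and report the result; it is an assumption here.
    """
    remaining_s = deadline_s - now_s
    # Stop if one more model plus the margin would not fit before the deadline.
    return remaining_s < avg_model_s + safety_margin_s
```

A work unit that ends this way would report the models already completed, so it is closer to a normal finish than to an abort, as the post says. What counts as "near" remains an open question in the thread; the margin here is just a placeholder.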

Dimitris Hatzopoulos Joined: 5 Jan 06 Posts: 336 Credit: 80,939 RAC: 0 |

[quote]AGAIN my point here is the Rosetta system need to have in place a method to remove bad work on servers and clients To think this will not happen again is unwise[/quote] Lauren, I just read in the other threads posts by Rhiju, Bin Qian and David Kim that:

If I understand the new features quoted above correctly, #3 and #4 should ensure that no WUs get stuck forever, until a human operator aborts them via BOINC. Hopefully, the BOINC server code (developed by Berkeley Univ as open source) will eventually implement the other features, most notably the capability / preference flags (e.g. >512M mem, BigWU etc), so that big WUs are sent only to PCs which are capable / willing to process them. PS: So, in light of recent changes, I'd say that a method to cancel bad WUs from volunteer PCs is much less of an issue, as bad WUs will take care of themselves. Best UFO Resources Wikipedia R@h How-To: Join Distributed Computing projects that benefit humanity |

|

tralala Joined: 8 Apr 06 Posts: 376 Credit: 581,806 RAC: 0 |

[quote]That WU was a nasty one. The first person who got it wasted 57 hours of CPU on it before noticing and aborting it, then the second person wasted another 14 hours. The third person let it sit in the queue until it went past deadline. Then you got it. At least now its had too many errors and won't be sent out any more.[/quote] Perhaps it would be a good idea to turn off the mechanism for resending failed WUs for the moment (i.e. by setting the number of failures until cancellation of a WU to 1). With such a setting, a bad WU would only bother one participant. |

