Result was reported too late to validate ????????????

Message boards : Number crunching : Result was reported too late to validate ????????????


Grutte Pier [Wa Oars]~MAB The Frisian (Joined: 6 Nov 05, Posts: 87, Credit: 497,588, RAC: 0)

https://boinc.bakerlab.org/rosetta/result.php?resultid=11593259 I would like to know how something like this is possible when the computer is running 24/7 and connected all the time. Do I have to check the queues myself every time, or what? The rest of the jobs in the queue are either already sent in every day or can be sent in by 16 March and later. https://boinc.bakerlab.org/rosetta/show_host_detail.php?hostid=88145 Furthermore, I'm using a program similar to BOINC Manager that can auto-abort "jobs approaching/passed deadline". So I'm rather curious to know the reason for this. I'm getting a bit tired of all these ????? errors. I hope our Stampede goes to another project; otherwise one can expect a lot more problems.


Los Alcoholicos~Megaflix (Joined: 10 Nov 05, Posts: 24, Credit: 77,199, RAC: 0)

Click through to the workunit's results and you'll see that the person who originally had the workunit didn't report it back within the deadline. The workunit was then sent to you, but before you finished it (within YOUR deadline), the other user sent theirs in and was granted the credit. Then you sent yours in, but it came in second and wasn't granted any credit.


Grutte Pier [Wa Oars]~MAB The Frisian (Joined: 6 Nov 05, Posts: 87, Credit: 497,588, RAC: 0)

Thanks, Megaflix. Funny way of crunching. I get the WU, and while my computer is crunching it, someone else sends it in before mine is done, so I'm crunching for nothing. Seems like a contest. Not my way of doing things. Just a waste of time and demotivating. My Cellie has already gone over to F@h. The rest will follow. If our Stampede is going to be R@H, I'm staying for a month and then that's it.


BennyRop (Joined: 17 Dec 05, Posts: 555, Credit: 140,800, RAC: 0)

That's not how Boinc and the validation system are supposed to handle this; it's one of the bugs they're working on tracking down and fixing. Reporting the errors helps them track them down, so keep doing it, and you'll be helping all of us. And the more bugs we get tracked down and removed, the more fun can be had with challenges like the DPC vs. Free-DC Gauntlet on FaD.

Angus (Joined: 17 Sep 05, Posts: 412, Credit: 321,053, RAC: 0)

It's another symptom of not using a quorum for granting credit, and screwing the user. Add this to the overgranting of credit to PCs that are enthusiastically reporting absurd benchmarks, and the project wonders why the CPU power on the project isn't growing...
Proudly Banned from Predictator@Home and now Cosmology@home as well. Added SETI to the list today. Temporary ban only - so need to work harder :)  "You can't fix stupid" (Ron White)


Rollo (Joined: 2 Jan 06, Posts: 21, Credit: 106,369, RAC: 0)

How can you use a quorum in a system in which each user can set a different run time for the _same_ work unit? A quorum simply makes no sense at all.

Angus (Joined: 17 Sep 05, Posts: 412, Credit: 321,053, RAC: 0)

The user-defined WU run time is another problem introduced by this project. If the user needs a specific run time on a WU, all copies of that WU should have the same run time, meaning it needs to be controlled on the server end, not the client end. The run time should be encoded in the WU and not allowed to be changed once downloaded. If this means editing the WU after the first copy is downloaded, then that is something the project has to take on. Otherwise, the whole credit-granting idea goes down the drain, and with it any significant increase in contributed CPU power. I'll repeat what I've said before, and will continue to say: fair and equitable credit granting is crucial to the success of ANY DC project, BOINC or otherwise. The competition, not the science, is what brings the CPU power.

Angus (Joined: 17 Sep 05, Posts: 412, Credit: 321,053, RAC: 0)

While it would be possible to develop a complex scheme of crediting to adjust for varying Work Unit run times, it is in fact unnecessary. All of the complaints in this area are based on the use of so-called "optimized" clients by some participants, which are in fact available to everyone equally. So the credit-based argument for redundancy has no merit. That argument only works if either EVERYONE or NO ONE is using the optimized BOINC client. It also doesn't address the ability to alter the benchmarks manually to the absurd levels that have been seen. Only a quorum that throws out the stupidly high credits will produce fairness in credit granting. Besides being wasteful of donated resources, and serving no scientific purpose for this type of project, redundancy significantly slows the return of science data and increases the load on the servers. Not having participation also slows the science, doesn't it? For projects like Rosetta that are not highly funded or staffed, this is an unnecessary overhead. While I have seen a few people claiming that the lack of redundancy will make droves of participants leave the project, the actual numbers do not bear out that argument. Projects that are not highly funded or staffed are exactly the type BOINC is aimed at. That's pretty much all of them so far. Dr Baker himself has been complaining in the Science forum about the lack of increased participation.
When a reason is given that is valid in the DC community (that credit granting is hopelessly flawed in this project), the head-in-the-sand thing starts happening again, as demonstrated below: So considering the wasteful nature of redundancy, the lack of any system to compensate for Work Unit variability in credit awarding, the lack of any data reflecting any significant impact on project participation, the additional cost to the project to support an infrastructure that does not provide any direct science benefit, the ready availability to the user community of an equalizing solution to the issue, and the fact that the entire BOINC credit system is about to be converted to a system that would eliminate the need to use redundancy to solve the problem, I would not look for redundancy in the Rosetta project any time soon.
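The quorum idea being argued over can be made concrete with a short sketch. This is a minimal illustration, not BOINC's validator code: it assumes the "throw out the high and low claim, average the rest" rule Darren mentions later in the thread, and the function names and the fallback for one or two claims (grant the lowest) are assumptions made for illustration. It also expresses the cost Dimitris raises: if every workunit is computed n times, only 1/n of the raw computing power produces distinct results.

```python
from statistics import mean


def grant_quorum_credit(claims: list[float]) -> float:
    """Hypothetical quorum rule: drop the highest and lowest claims and
    average the rest. With only one or two claims nothing can be trimmed,
    so this sketch simply grants the lowest claim (an assumption)."""
    if not claims:
        raise ValueError("need at least one claimed credit")
    ordered = sorted(claims)
    if len(ordered) <= 2:
        return ordered[0]
    return mean(ordered[1:-1])


def effective_fraction(replication: int) -> float:
    """Share of raw computing power that yields distinct results when
    every workunit is computed `replication` times."""
    if replication < 1:
        raise ValueError("replication must be at least 1")
    return 1.0 / replication


if __name__ == "__main__":
    print(grant_quorum_credit([30.0, 31.0, 150.0]))  # the 150 claim is dropped
    print(effective_fraction(3))
```

With claims of 30, 31 and 150 credits, the inflated 150 is discarded and 31 is granted; the price is that three hosts did the work of one.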

Snake Doctor (Joined: 17 Sep 05, Posts: 182, Credit: 6,401,938, RAC: 0)

... I'll repeat myself again - ... A frequently used technique for a hollow argument or gratuitous assertion. I can't help but notice that while I see many posts from you complaining about this issue, AND even a few saying you would leave the project, you seem to still be here, and you seem to still be running WUs. These facts kind of make your argument seem like baseless table pounding.
We must look for intelligent life on other planets, as it is becoming increasingly apparent we will not find any on our own.

Angus (Joined: 17 Sep 05, Posts: 412, Credit: 321,053, RAC: 0)

... I'll repeat myself again - ... Ignoring the redundant redundancy part, I'm not actually running any Rosetta WUs today. One box may have accidentally grabbed some last week, and I certainly am not putting the 70+/- GHz on it that I once did. Oh, and I didn't see a requirement to be actively crunching to be able to post here...

Dimitris Hatzopoulos (Joined: 5 Jan 06, Posts: 336, Credit: 80,939, RAC: 0)

Angus, forgive me for being blunt, but frankly this constant bitching and moaning is getting a bit old. And counter-productive. Especially since you are twisting the facts IMHO. The user-defined WU run time is another problem introduced by this project. The variable-runtime-WU solution was actually the best possible way to solve Rosetta's pressing "1 GByte per P4 per month" Internet traffic issue, an insurmountable hurdle for dialup contributors and people with traffic quotas, and a big burden for multi-PC crunching-farm-at-home people like NightOwl. Only a quorum that throws out the stupidly high credits will produce fairness in credit granting. A quorum would immediately slash effective "science" TeraFLOPS to 1/3 (or even less). For the BOINC projects I checked, redundancy slashes effective TFLOPS to 1/4 of "raw" TeraFLOPS. Unless there were a valid "science" reason, do you really think that implementing a quorum would attract 3x or 4x as many participants to compensate for the loss? I agree that the possibility of claiming higher credits is a nuisance. But so are other issues, e.g. the fact that the standard Linux BOINC client underestimates credits by almost 50%. And if more and more users migrate to optimized BOINC clients, the whole point will be moot, unless you suggest further increasing the quorum so that, by chance, some non-optimized BOINC clients are included in the mix. Where would it all end? Don't get me wrong, I'm also in favor of 100% fair credits for all BOINC projects. But IMHO there are other options R@h could pursue with respect to credits, without an adverse impact on effective TFLOPS. Like running the CPU benchmark on the science app itself. Like assigning a credit to each model/step. Or, in addition to "top predictions", maybe ranking how many of one's models were "close" (as a percentile) to native, etc., etc. the lack of increased participation. I often take a peek at the BOINC stats and "hosts added last day".
I have run practically all BOINC projects, and the fact is that, with the exception of CPDN, Rosetta is the heaviest one on a system's resources (it still is on memory, and until very recently was on Internet bandwidth). Plus the extra requirements, like "leave app in mem" etc. And the 1% etc. issues some PCs have. Here we have a project doing some potentially groundbreaking science. A responsive science team, giving daily feedback to the "troops". And fixing things like bandwidth requirements etc. So let's not miss the forest for the trees.
Best UFO Resources Wikipedia R@h How-To: Join Distributed Computing projects that benefit humanity

Angus (Joined: 17 Sep 05, Posts: 412, Credit: 321,053, RAC: 0)

Nobody in this thread said that there weren't other issues keeping people from jumping into Rosetta with both feet. This was a thread on validation and credit granting.

|

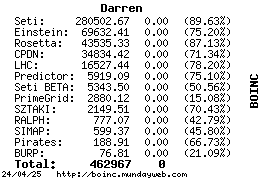

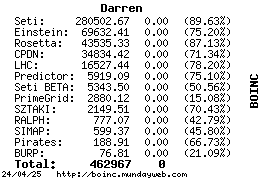

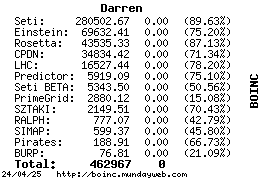

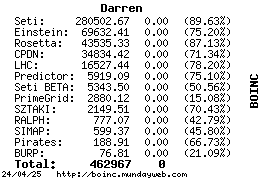

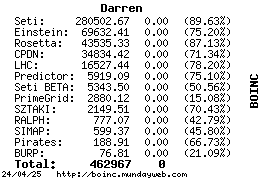

Darren (Joined: 6 Oct 05, Posts: 27, Credit: 43,535, RAC: 0)

All of the complaints in this area are based on the use of so-called "optimized" clients by some participants, which are in fact available to everyone equally. While I don't want to get in the middle of the personal dispute going on here, I have some lingering doubt as to whether all the complaints are really pointing at legitimately optimized clients. I use an optimized client myself (the one made by Harold Naparst and available to anyone), but some of the benchmarks I've seen don't appear to be coming from any legitimate optimized client. Now, I'm as far as could possibly be from being a computer programmer, and I couldn't interpret a single line of code if my life depended on it. That said, it took me well under 2 minutes to find a way to alter the code to get bogus benchmarks. This host is the one I created, with no difficulty whatsoever, with bogus benchmarks. Since I had altered the code, I let it run a single work unit for one hour (the shortest time possible) just to make sure the client really did still work properly and return results that would validate. The benchmarks on that host came from my first guess at making changes, and I didn't do too well: my Whetstone is lower than a legitimate optimized client's, but my Dhrystone is much higher than legitimate. The end result was that my system, which normally claims 10.125 cs/hour with a legitimate optimized client, claimed 33 cs/hour after my changes, and those changes are now built into the code so it will rebenchmark this way every time. THESE CHANGES ARE CLEARLY CHEATING, and I made them by doing nothing more than altering 1 single item in 2 files, but without some kind of sanity check from rosetta ANYONE can cheat like this. And to show how completely absurd it can get, I then decided to go even further and see what it would give.
I won't attach it unless there is some compelling need to prove the benchmarks it gives me now, as I would have to trash the work unit that would be initially assigned. However, here's the output from executing the absurd version (and there appears to be no limit on how far it can go if I just change it even more than I did):

platinum client # ./boinc
2006-03-11 18:46:55 [---] Starting BOINC client version 5.2.14 for i686-pc-linux-gnu
2006-03-11 18:46:55 [---] libcurl/7.15.2 OpenSSL/0.9.7i zlib/1.2.3
2006-03-11 18:46:55 [---] Data directory: /home/darren/extract/boinc/client
2006-03-11 18:46:55 [---] Processor: 2 GenuineIntel Intel(R) Pentium(R) 4 CPU 3.06GHz
2006-03-11 18:46:55 [---] Memory: 883.68 MB physical, 494.18 MB virtual
2006-03-11 18:46:55 [---] Disk: 13.80 GB total, 7.96 GB free
2006-03-11 18:46:55 [---] No general preferences found - using BOINC defaults
2006-03-11 18:46:55 [---] Remote control not allowed; using loopback address
2006-03-11 18:46:55 [---] Primary listening port was already in use; using alternate listening port
2006-03-11 18:46:55 [---] This computer is not attached to any projects.
2006-03-11 18:46:55 [---] There are several ways to attach to a project:
2006-03-11 18:46:55 [---] 1) Run the BOINC Manager and click Projects.
2006-03-11 18:46:55 [---] 2) (Unix/Mac) Use boinc_cmd --project_attach
2006-03-11 18:46:55 [---] 3) (Unix/Mac) Run this program with the -attach_project command-line option.
2006-03-11 18:46:55 [---] Visit http://boinc.berkeley.edu for more information
2006-03-11 18:46:57 [---] Running CPU benchmarks
2006-03-11 18:47:56 [---] Benchmark results:
2006-03-11 18:47:56 [---] Number of CPUs: 2
2006-03-11 18:47:56 [---] 39714 double precision MIPS (Whetstone) per CPU
2006-03-11 18:47:56 [---] 2310738 integer MIPS (Dhrystone) per CPU
2006-03-11 18:47:56 [---] Finished CPU benchmarks
2006-03-11 18:47:57 [---] Resuming computation and network activity
2006-03-11 18:47:57 [---] request_reschedule_cpus: Resuming activities

Notice the benchmarks, and just imagine what kind of credit that would claim. But my whole point here is that there needs to be some kind of sanity check put in place by rosetta. If nothing else, a script that runs every day or so and just looks for totally out-of-whack benchmarks compared to the CPU they were run on, then adjusts and recalculates the granted credit for those hosts that are too far from reasonable. OK, stepping off my soapbox now...
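To see why edited benchmarks translate directly into inflated claims, here is a rough sketch of a benchmark-based claim. It assumes the cobblestone definition BOINC used at the time: 100 credits per day of CPU time on a reference machine rated at 1,000 Whetstone MIPS and 1,000 Dhrystone MIPS, with the two benchmarks averaged. The function and constant names are hypothetical; this is not the client's actual code.

```python
SECONDS_PER_DAY = 86_400
CREDITS_PER_REFERENCE_DAY = 100.0  # assumed: one cobblestone = 1/100 of a reference-machine day
REFERENCE_MIPS = 1_000.0           # assumed reference: 1,000 Whetstone and 1,000 Dhrystone MIPS


def claimed_credit(cpu_seconds: float, whetstone_mips: float, dhrystone_mips: float) -> float:
    """Assumed benchmark-based claim: CPU days x 100 x (mean benchmark / reference).

    The claim scales linearly with the benchmarks, so nothing in this
    formula limits what an edited benchmark can claim.
    """
    mean_mips = (whetstone_mips + dhrystone_mips) / 2.0
    cpu_days = cpu_seconds / SECONDS_PER_DAY
    return cpu_days * CREDITS_PER_REFERENCE_DAY * mean_mips / REFERENCE_MIPS


if __name__ == "__main__":
    # One CPU-hour at the absurd per-CPU benchmarks from the log above.
    print(round(claimed_credit(3_600, 39_714, 2_310_738), 1))
```

Under this assumed formula, the absurd benchmarks in the log (39,714 Whetstone and 2,310,738 Dhrystone MIPS per CPU) would claim roughly 4,897 credits per CPU-hour, against the roughly 10 cs/hour Darren reports for his legitimate optimized client.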


Darren (Joined: 6 Oct 05, Posts: 27, Credit: 43,535, RAC: 0)

...So the problem you have pointed out is a BOINC problem. Well, I only partly agree with that. Having been with boinc since it was in beta testing, I know boinc addressed this from the very beginning. You have not seen me recommend redundancy here, and you won't see it, but that is the built-in method that boinc uses to prevent people from claiming falsely high credit. It is ROSETTA that chooses not to run their project in a way that allows the boinc software to prevent this as it was intended. The problem that I mention does not occur on other projects; it is unique to rosetta. Boinc released the software as open source knowing it could be manipulated, then implemented redundancy to negate any benefit anyone would gain from making those manipulations. Again, I don't advocate redundancy here (or on most projects, for that matter), just some simple sanity check to detect falsely high credit claims. ...melodramatic stuff edited out... While clearly a few people are concerned with credit awards to the exclusion of all else, the awarding of credits is not the primary purpose of ANY of the BOINC projects. And it should not be, but neither should it be totally ignored. I really don't care about credits myself. I've participated in projects knowing credit would be lost, such as boinc beta. Yeah, I post my credits in my signature, but they're just numbers; they have no value and I know that. However, they are the only thing that crunchers get in exchange for their participation. And as such, the project should demonstrate some degree of respect for them rather than a "we don't care if people cheat" attitude. The primary purpose of the Olympics is not awarding medals, but the Olympic committees ensure that the ones actually awarded were really earned. ...But frankly, Rosetta is not currently planning to institute redundancy, and I have heard of no plans to develop a new credit system unique to Rosetta. Again, I've never suggested that.
The most I've suggested is a script to trigger an automated process of "correcting" credit claims that are clearly abnormal. That position does not constitute hiding from the issue, or ignoring the users; it constitutes fixing the problem at the source. The benchmark system is controlled by BOINC, the credit claims are calculated by BOINC, the ability to hack certain important BOINC files is a BOINC security issue, and the lack of support for non-redundant projects is a BOINC problem. Therefore the fix lies with some future version of the BOINC client package, and that is precisely what the project is trying to get. Again, only half credit here. Boinc is designed to operate a certain way, it is Rosetta that does not conform to that standard. Now, I fully hope that boinc is successfully modified to support everything Rosetta wants (and any other project that wants to use boinc), but this adamant "it's all boinc and none of it rosetta" position on blame is really astounding. As it is rosetta that is deviating from the boinc norm, rosetta does have some obligation in addressing the issue.


Darren (Joined: 6 Oct 05, Posts: 27, Credit: 43,535, RAC: 0)

Most people who look at this objectively would agree that redundancy, while a nice patch for a design flaw, is not the best solution for the validation issue if the only purpose for using it is credit granting. If I read you correctly, you see it that way as well. In certain cases redundancy is required to validate the quality of the science, but Rosetta is not one of those cases. While a number of projects do use redundancy, it is a significant waste of resources unless it serves the project. That is my exact take on redundancy. On some projects it serves a scientific need and should exist. On most it doesn't and should not exist. That is why I made it perfectly clear that I do not think rosetta should implement redundancy. Your position that Rosetta has somehow violated the intent of BOINC by requesting improvements to the Client, is just not correct. I don't think I have in any way implied such a position. You have correctly pointed out that BOINC redundancy was instituted to solve known weaknesses in the BOINC credit system. So in truth BOINC is forcing the projects to give up between 1/2 and 3/4 of the available project computing power to fix a problem in the BOINC infrastructure and design concept. But as everyone knows, you're not forced to use the redundancy concept that boinc has integrated. However - and this is a big however - when you decide NOT to use it, you should have a viable alternative in its place. Right or wrong, good or bad - it does serve a purpose. Considering that, it shouldn't simply be discarded unless an alternative is put in its place. Your database is under your control, not Berkeley's. When rosetta decided not to implement the boinc solution at the boinc level, an alternative solution should have been put in place at the rosetta level. 
Not to say that suggestions (very forceful ones if necessary) shouldn't be presented to Berkeley to improve the boinc program overall, but until that happens it's still rosetta that chose no redundancy, so it's still rosetta's responsibility to have a (hopefully temporary) alternative in place. If we are to expect no changes in BOINC that are driven by the needs of the projects, then all of the projects should conform to the original SETI standard when BOINC was first released. Instead, most people recognize that BOINC itself is actually in a state of development. ... Can anyone honestly say that BOINC should NOT be improved? Is it so perfect in concept and current implementation that change is unnecessary? I'm not a boinc cheerleader; I think boinc (the program, not the concept) still needs a lot of improvement. In my mind, though, that still doesn't equate to a project just ignoring one of the "less-than-desirable" aspects of boinc without somehow compensating for the function that is lost by ignoring that bad aspect (in this case, fair credit). Again, it goes back to right or wrong, good or bad: that less-than-desirable aspect does still serve a purpose. Frankly, the new time feature in Rosetta is a quite elegant and simple solution to a range of problems beyond simple bandwidth reduction that face all BOINC projects. For example, how about allowing slower machines to run the projects and still meet the reporting deadlines? While my machine is not overly slow, this one thing was the sole trigger for me deciding to allocate much more of my machine to rosetta. I currently run them for 48 hours, and plan to increase to 96 very soon. I guess I'm a little backwards from most crunchers in that respect; I prefer long work units over short ones. What Rosetta is asking of BOINC is precisely what the BOINC environment is all about. Flexibility, creativity, science, innovation, and reaching for more. I am sorry you feel that all of this is melodramatic.
Well, that was not the melodramatic part of your post. The melodramatic part was the implication that the human race may become extinct if the project were to devote just a bit of attention to fixing a problem its own design caused instead of devoting every minute towards the science, which "is life and death for everyone". Not that the science is not extremely important - I think it is. But I guess having been a paramedic for 23 years, my definition of "life and death for everyone" is a bit different from that of a rosetta scientist.


Scribe (Joined: 2 Nov 05, Posts: 284, Credit: 157,359, RAC: 0)

Darren said -

but Darren said earlier - ...Again, only half credit here. Boinc is designed to operate a certain way, it is Rosetta that does not conform to that standard........ Which says to me that Darren did imply that Rosetta had violated the intent of Boinc!


Darren (Joined: 6 Oct 05, Posts: 27, Credit: 43,535, RAC: 0)

Darren said - And what does the rest of that very paragraph say: Again, only half credit here. Boinc is designed to operate a certain way, it is Rosetta that does not conform to that standard. Now, I fully hope that boinc is successfully modified to support everything Rosetta wants (and any other project that wants to use boinc), but this adamant "it's all boinc and none of it rosetta" position on blame is really astounding. As it is rosetta that is deviating from the boinc norm, rosetta does have some obligation in addressing the issue. I never said they can't or shouldn't deviate from the boinc norm, just that they are deviating from the norm. But they shouldn't simply discard functionality because they don't like the process that generates that functionality. If they don't like the process, they should implement a local alternative, not simply ignore it. What I said and what you're implying I said are two very different things.


Darren (Joined: 6 Oct 05, Posts: 27, Credit: 43,535, RAC: 0)

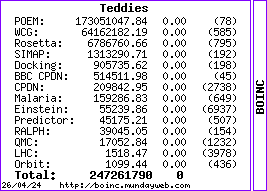

My apologies if I misunderstood your original statement, but I took it the same way "Scribe" took it. And I apologize for not being clear enough. I thought I had made it very clear that I don't like the way boinc addresses the issue (by implementing redundancy) either, but that it does serve a purpose, so an alternative should be used by any project that chooses not to use redundancy. I even said that I thought projects should not use redundancy if it is not scientifically necessary, so I am kind of baffled how anyone could interpret what I said to mean projects should do exactly as boinc/seti does and are somehow "violating" some concept if they don't. But the idea that they will somehow create some sort of fair "leveling" process and remove credit from people on a wide scale would be more repugnant to many than the present situation. Imagine the outcry from people who have credits reduced through such a process. I think any outcry would be surprisingly small. Any rosetta participant who also participates in any other project is already fully accustomed to the fact that the amount of credit they ask for and the amount of credit they get can be different. People have complained about the benchmark variations long and loud all the way back to when boinc was still in beta. If rosetta implemented a "leveling" process that corrected totally out-of-line benchmarks, rather than the normal throw-out-the-high-and-low-claim leveling process other projects now use, I think rosetta would be considered a hero, not a villain. Moreover this is not as trivial a process as you imply. There would have to be testing, benchmarking, code preparation, and the increased load on the servers would impact the operation of the project. My original suggestion of averaging is not so complex. It would require running against the database, which is why I suggested it be run once per day or so.
If, for example, a script extracted the benchmarks of all the P4 2.8 GHz hosts and then averaged those results, you would have a starting point. The project then determines how much a benchmark can reasonably and legitimately vary from the average of all similar systems, then applies that as the maximum and minimum for that CPU. This would have to be done for each type and speed of CPU, but it would only have to be done once. And one script (OK, it has to be written, but it's not a complex script) running one time could get all the averages and determine the high and low variation tolerance numbers. Those numbers are then used to write another quite simple script to run at project-defined intervals and look for benchmarks that are out of range. If it finds any, too high or too low, it recalculates the credit since its last run using the project-defined maximum or minimum benchmark for that CPU. For that matter, granting of credit could even be held until after the script has OK'd the benchmarks; it's not like people aren't already used to waiting days for credit to be issued on projects that use redundancy. Of course, any process that defines an acceptable range doesn't totally eliminate the ability to manipulate, but it does cap just how far any manipulation can take you. There are over 40,000 users attached to the project, fewer than 100 individual people have raised this issue, and some of those raise it often and loudly. When it is raised the project looks at the problem and takes action if it is appropriate, but the forums give a false impression of the actual size of the problem. If people are cheating, it stands to reason that they would appear among the top individual systems. But even that is not easy to say when a high RAC number can be created by infrequent result uploads, and high credit claims can be made by identical systems that for a number of reasons have different but legitimate benchmarks.
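Darren's proposal can be sketched as a small batch job. Everything here is hypothetical: the `Host` record, the 50% tolerance band and the sample benchmarks for hosts 1 to 3 are invented for illustration (only host 4's figures come from the top computer quoted in this post), and neither Rosetta nor BOINC is known to ship anything like it. One deliberate swap: the sketch uses the per-model median rather than the plain average Darren describes, because a few absurd benchmarks would drag a mean upward but barely move a median.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class Host:
    host_id: int
    cpu_model: str          # CPU string as reported by the client (may be wrong)
    whetstone_mips: float
    dhrystone_mips: float


def model_baselines(hosts: list[Host]) -> dict[str, tuple[float, float]]:
    """Median Whetstone and Dhrystone benchmark for each reported CPU model."""
    by_model: dict[str, list[Host]] = defaultdict(list)
    for h in hosts:
        by_model[h.cpu_model].append(h)
    return {
        model: (median(h.whetstone_mips for h in group),
                median(h.dhrystone_mips for h in group))
        for model, group in by_model.items()
    }


def _clamp(value: float, baseline: float, tolerance: float) -> float:
    """Limit value to the band baseline * (1 - tolerance) .. baseline * (1 + tolerance)."""
    return min(max(value, baseline * (1 - tolerance)), baseline * (1 + tolerance))


def benchmark_corrections(hosts: list[Host], tolerance: float = 0.5) -> dict[int, tuple[float, float]]:
    """Return clamped (whetstone, dhrystone) pairs for hosts outside the allowed band.

    Hosts already within tolerance are left out; the corrected values could
    then be fed into a credit recalculation for those hosts.
    """
    baselines = model_baselines(hosts)
    corrections = {}
    for h in hosts:
        base_w, base_d = baselines[h.cpu_model]
        w = _clamp(h.whetstone_mips, base_w, tolerance)
        d = _clamp(h.dhrystone_mips, base_d, tolerance)
        if (w, d) != (h.whetstone_mips, h.dhrystone_mips):
            corrections[h.host_id] = (w, d)
    return corrections


if __name__ == "__main__":
    hosts = [
        Host(1, "Pentium 4 2.80GHz", 1200, 2400),  # hypothetical values
        Host(2, "Pentium 4 2.80GHz", 1250, 2500),  # hypothetical values
        Host(3, "Pentium 4 2.80GHz", 1300, 2600),  # hypothetical values
        Host(4, "Pentium 4 2.80GHz", 6346.35, 13034.85),  # figures from the post
    ]
    print(benchmark_corrections(hosts))
```

Only host 4 is clamped, to the top of its model's band; the others are untouched. The grouping relies entirely on the CPU string the client reports, so, as La Muis points out below, a misidentified processor or clock speed would put a host in the wrong group.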
I learned long ago not to form opinions based on forum rants. The only reason I joined in this discussion is that I can look at the list of top computers myself and see for myself what I'm talking about. At the time I'm writing this, the top computer has the following details:

CPU type: GenuineIntel Intel(R) Pentium(R) 4 CPU 2.80GHz
Number of CPUs: 2
Operating System: Microsoft Windows XP Professional Edition, Service Pack 2, (05.01.2600.00)
Memory: 502.98 MB
Cache: 976.56 KB
Measured floating point speed: 6346.35 million ops/sec
Measured integer speed: 13034.85 million ops/sec

Now, as you know I'm no computer expert, but even I know that there is no legitimate way the benchmarking method boinc uses could produce those benchmarks on a P4 2.8 GHz system. Not that I'm saying this person did anything to intentionally cheat (boinc itself, with no user intervention, has done weirder things all by itself before), but the benchmarks are still clearly wrong. If a script ran every day or so and adjusted those to an acceptable maximum, they might still be wrong, but not as wrong. Who could complain about that? The legitimate user with totally out-of-whack benchmarks will not care that they were corrected. I see no way anyone could complain about a fair crediting system unless they're not playing fair themselves. The answer to this problem is the proposed flops counting system being incorporated into BOINC right now, not some arbitrary credit value assigned to each work unit and imposed by a single project. That would be no different than simply saying one work unit equals one credit. The only way to get the flops system in place is to work with the BOINC team to do it, and the only way to get that done is to demonstrate a real-world need on one of the projects to have it. That is precisely what Rosetta has done, and is doing. I give rosetta full credit on that count.
However, I also know from being a seti beta tester that there are some current flaws with that concept that are just as big as the existing flaws. Granted, it's still not ready for general release, so there is some time to work some of that out. As has been pointed out over there, though, it doesn't seem to really be fixing the problem; it just buries it a little deeper and makes it even harder to find. Anyway, I think all of my views here are pretty well known. Not that anyone listens or cares, but at least I feel better having screamed about it for a while. So, I'll just slip back into obscurity now.


Scribe (Joined: 2 Nov 05, Posts: 284, Credit: 157,359, RAC: 0)

I have to agree with Darren here... those who shout the loudest often have something to hide... others like me would not object to the 'cheats' being levelled off!

Los Alcoholicos~La Muis (Joined: 4 Nov 05, Posts: 34, Credit: 1,041,724, RAC: 0)

My original suggestion of averaging is not so complex. It would require running against the database, which is why I suggested it be run once per day or so. If, for example, a script extracted the benchmarks of all the P4 2.8 GHz hosts then averaged those results, you now have a starting point. Unfortunately, BOINC isn't able to identify the right processor and processor speed. As long as that is the case, such a leveling will unleash a lot of indignation and discussion. In my case BOINC misreports an Intel Celeron Tualatin 1.4 GHz as a GenuineIntel Pentium(r) II Processor. A PowerPC G4 2 GHz upgrade isn't recognized and is still reported as a PowerPC3,1, and I know of overclocked processors running more than 40% above stock speed without BOINC being able to report the right speed. Although I would feel a lot more comfortable with a better benchmark/crediting system, the proposed "leveling" system certainly isn't the solution because of the false starting point.


©2026 University of Washington

https://www.bakerlab.org