too many WUs downloaded

Message boards : Number crunching : too many WUs downloaded

| Author | Message |

|---|---|

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

I run off-line and connect (dial-up) once or twice a week. On last Thursday's connect, the project spent NINE HOURS downloading work to me. Just looked at my ready queue, and multiplying the number of WUs still to be processed (112) by the recent average time each takes (4+ hours), I figure it will be __18__ days to finish all these downloaded WUs. However, they are ALL due in THREE days !!! I thought BOINC was supposed to have a better "work scheduler" than that. [I suspect BOINC looked at the 'ready' queue and "ordered" <xx> WUs; then some minutes later BOINC looked again at the ready queue and "ordered" <yy> WUs, but did not realize that because of my slow connection the earlier <xx> WUs had __NOT__ yet been downloaded -- meaning the extra <yy> WUs were in fact NOT NEEDED. Instead, after some minutes BOINC again looked at the ready queue and ordered yet more <zz> WUs -- continuing in this behavior (ordering even more WUs) on and on and on !!] Apparently, when the "time to expire" of WUs was 28 days, a FLOOD of WUs downloaded to my system could be worked off normally. With the 7-day "time to expire" WUs, I'll probably get OODLES of 'client errors' attributed to me !! . |
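As a rough check of the arithmetic in the post above, this sketch recomputes the backlog from the quoted figures (112 queued WUs, about 4 hours each, 3 days to the deadline). The function names and the `cpus` parameter are illustrative assumptions, and the calculation assumes a CPU that crunches around the clock with nothing else competing for it.

```python
HOURS_PER_DAY = 24


def days_to_finish(queued_wus: int, hours_per_wu: float, cpus: int = 1) -> float:
    """Wall-clock days needed to crunch the whole queue (hypothetical helper)."""
    return queued_wus * hours_per_wu / (cpus * HOURS_PER_DAY)


def wus_finishable(days_left: float, hours_per_wu: float, cpus: int = 1) -> int:
    """How many WUs can complete before the deadline (hypothetical helper)."""
    return int(days_left * HOURS_PER_DAY * cpus // hours_per_wu)


backlog_days = days_to_finish(112, 4.0)  # 112 WUs at roughly 4 hours each
doable = wus_finishable(3, 4.0)          # everything is due in 3 days

print(f"{backlog_days:.1f} days of queued work")
print(f"{doable} WUs can finish before the deadline")
```

With these inputs the queue holds between 18 and 19 days of work, while only 18 of the 112 WUs could finish inside a 3-day window on one CPU.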

|

Astro Send message Joined: 2 Oct 05 Posts: 987 Credit: 500,253 RAC: 0 |

BOINC requests work in seconds, enough to fill the requested queue. How much work that turns out to be depends on the estimated run time the project reports to BOINC. If the estimate is 2 hours but the work really takes 8, BOINC has no way of knowing that. BOINC does have a built-in DCF (duration correction factor): it learns how long the work actually takes and applies a percentage adjustment to the estimate. The drawback is that WUs have to be completed before BOINC can get that figure. The real problem here is that the project is telling BOINC the wrong info. Basically, BOINC is requesting the proper amount to fill the need, but the project is delivering the wrong quantity. tony |
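To make the duration correction factor idea concrete, here is a minimal sketch. It is not BOINC's actual update rule: the blending weight, the function names, and the 3-day (259200 second) request are assumptions chosen for illustration. Only the 2-hour estimate versus 8-hour reality comes from the post above.

```python
import math


def update_dcf(dcf: float, estimated_s: float, actual_s: float, weight: float = 0.5) -> float:
    """Blend the old factor with the newly observed actual/estimate ratio (assumed rule)."""
    observed = actual_s / estimated_s
    return (1 - weight) * dcf + weight * observed


def wus_to_fill(seconds_wanted: float, estimated_s: float, dcf: float) -> int:
    """Number of WUs that fill a request when each is believed to take estimated_s * dcf."""
    return math.ceil(seconds_wanted / (estimated_s * dcf))


ESTIMATE_S = 2 * 3600   # the project says 2 hours
ACTUAL_S = 8 * 3600     # the work really takes 8 hours
WANTED_S = 3 * 86400    # hypothetical 3-day cache

dcf = 1.0
before = wus_to_fill(WANTED_S, ESTIMATE_S, dcf)        # nothing learned yet
dcf = update_dcf(dcf, ESTIMATE_S, ACTUAL_S)            # one WU has completed
after_one = wus_to_fill(WANTED_S, ESTIMATE_S, dcf)
truly_needed = math.ceil(WANTED_S / ACTUAL_S)

print(before, after_one, truly_needed)
```

Under these assumptions the first request is filled with 36 WUs where 9 would do, and the correction only starts to help after a WU has actually been completed, which is the drawback described above.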

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

boinc requests work in seconds needed to fill requested queue. This is determined by what the projects tell boinc the estimated run time will be. If the estimate is 2 hours but really takes 8 then boinc has no way of knowing that. Boinc does have a built in DCF (duration correction factor). It learns the time it takes and does a percentage adjustment to the estimate and applies that. The downfall is that WUs have to be done to boinc can get the figure. True. I did not want to clutter up my post with numbers (and I don't have good records) but the client *did* request work in seconds, and KEPT ON REQUESTING (at intervals of four minutes) work in seconds. I don't have the initial request, but early on the client was requesting 299837 seconds of work. Four minutes later it was requesting 279763 seconds of work. Four minutes later it was requesting 259869 seconds of work. Requests were repeated every four minutes. By an hour after the 299837 request, the current request sent by the client was still for 143067 seconds of work. Eventually, the server realized there was an overcommitment and said 'no more'. the real problem here is that the project is telling boinc the wrong info. I disagree. There may or may not be a question of whether the request for 299837 seconds of work was ACCURATE, but that's about 3.5 days. The __REAL__ problem was that the WUs downloaded amounted to 24 days or so of work. The estimated WU run time would have had to be around 2200 seconds in order for all the downloaded WUs to fit into 299837 seconds. But the average WU on my system has been taking 1.5 hours or more (and the server HAS that history). I *don't* think the project would have been that far off. In my opinion, the problem is the BOINC client asking for work (<xx> seconds) and AGAIN asking for work (<yy> seconds) and AGAIN asking ... . |

|

AMD_is_logical Send message Joined: 20 Dec 05 Posts: 299 Credit: 31,460,681 RAC: 0 |

One problem is that just before the update there were many WUs that crunched very fast, some only 20 minutes or so. Now all WUs take 8 hours, but the BOINC client needs a little time to figure that out. Until it does, it will think that all WUs crunch fast and thus many are needed to keep the computer busy. If all WUs request the same amount of time, and crunch in the same amount of time, BOINC should be able to adjust to accurately display the time needed to crunch a WU and also to load the correct number of WUs for a given cache setting. |

|

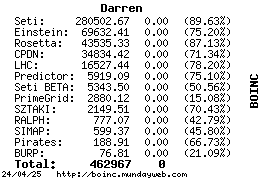

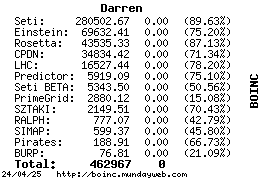

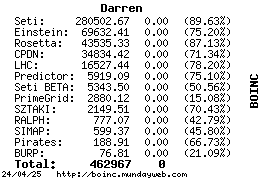

Darren Send message Joined: 6 Oct 05 Posts: 27 Credit: 43,535 RAC: 0 |

I've seen the same thing many times and can vouch that it does happen. It's never been a problem for me, as I have a very small cache so I've always been able to do the work long before it expires. I do agree that it looks like a boinc issue, not a project issue. It seems that on downloads that are moving particularly slowly, boinc arrives at a new "work request" point while work is still downloading from the last request. The problem is that boinc does not seem to include the time it will take to process the work that is not yet downloaded, but instead only considers what is "ready to run" when it decides how much more to ask for. The net result is that anything still waiting to be downloaded gets that much time requested again on the new request. Each new request does get successively smaller as more has downloaded since the last one, but everything stays in the queue to download - so once it all finally gets there, you can have a huge amount more work than what you should have gotten. I've noticed this both on rosetta and einstein, where it can take a while to get the large files. I've never seen it happen on projects with small files, as they're always "ready to run" before a new request would be made.
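The behavior described above can be sketched as a small simulation. This is a toy model, not the BOINC client's code. It assumes a 300000-second cache and 20000 seconds of work arriving between consecutive requests (roughly the drop between successive requests quoted earlier in the thread), and it ignores both work crunched during the download and any server-side limit. All names are hypothetical.

```python
def total_requested(cache_s: int, arrives_per_interval_s: int,
                    count_pending: bool, max_rounds: int = 1000) -> int:
    """Total seconds of work requested until the client believes its cache is full."""
    ready = 0      # work fully downloaded ("ready to run")
    pending = 0    # work already requested but still downloading
    total = 0
    for _ in range(max_rounds):
        known = ready + (pending if count_pending else 0)
        shortfall = cache_s - known
        if shortfall <= 0:
            break
        pending += shortfall          # ask for whatever seems to be missing
        total += shortfall
        arrived = min(pending, arrives_per_interval_s)
        pending -= arrived            # a slow link delivers only part of it
        ready += arrived
    return total


ignoring_downloads = total_requested(300_000, 20_000, count_pending=False)
counting_downloads = total_requested(300_000, 20_000, count_pending=True)

print(ignoring_downloads, counting_downloads)
```

In this toy model, a client that looks only at "ready to run" work ends up requesting 2,400,000 seconds, eight times the cache. One that also counts in-flight downloads requests 300,000 seconds once and stops.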

|

|

Astro Send message Joined: 2 Oct 05 Posts: 987 Credit: 500,253 RAC: 0 |

Mikus, the new 4.82 is a change. You now have in the "rosetta preferences" section of "general preferences" under "your account", a setting called "Max CPU run time". This was set by default to 8 when they introduced it. You can change it to 2 hours and all your work will be done in time. Dr Kim cautioned users about just your situation somewhere around here. tony |

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

Mikus, the new 4.82 is a change. You now have in the "rosetta preferences" section of "general preferences" under "your account", a setting called "Max CPU run time". This was set by default to 8 when they introduced it. You can change it to 2 hours and all your work will be done in time. Here we get into a pet peeve of mine. Looking at the words "Max CPU run time" I have not the __SLIGHTEST__ idea of what those words are supposed to mean. My CPU runs 24/7. [It's a multiprocessor. Rosetta is its only project using BOINC, but I do other work on the system as well.] The system is offline except for once or twice a week. So I *do* need to have downloaded 168 hours of Rosetta work, in case I don't connect again for a week. Are you saying that changing this ?undocumented? value to '2' will prevent those extra 18 days of work currently queued up in my system from timing out? . |

|

Darren Send message Joined: 6 Oct 05 Posts: 27 Credit: 43,535 RAC: 0 |

Are you saying that changing this ?undocumented? value to '2' will prevent those extra 18 days of work currently queued up in my system from timing out? Yeah, that's pretty much what he's saying. If you're interested in learning more about how it works, you can jump over to the alpha forums and read up on it there, but in short that number tells rosetta how long you want it to process each work unit. It will then, as much as possible, aim for processing the work unit only that far - remember it has to complete its current cycle, so it won't be exact. If you reduce it to 2 hours, each work unit will then run approximately 2 hours, then finish for that one and move on to the next. With your connect schedule, once you get these cleared out it will benefit you greatly if you increase the time way up. Then you can download 1 work unit and run it for 4 days, or download 2 and run each for 2 days, etc. That will greatly reduce your downloads when you are connected.
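The trade-off described above (longer run time per work unit means fewer downloads) can be sketched as follows. The helper name is hypothetical, and the sketch assumes each work unit runs for almost exactly its target time, which the post notes is only approximately true.

```python
import math


def wus_needed(offline_hours: int, target_run_hours: int, cpus: int = 1) -> int:
    """Work units needed to keep `cpus` processors busy while offline (hypothetical helper)."""
    return math.ceil(offline_hours * cpus / target_run_hours)


# The examples from the post: 4 days offline, one CPU.
four_days = 4 * 24
print(wus_needed(four_days, 96))   # one WU run for 4 days
print(wus_needed(four_days, 48))   # two WUs run for 2 days each

# A full week offline (168 hours) at different target run times.
for target in (2, 8, 24):
    print(target, "hour target:", wus_needed(168, target), "WUs")
```

For a week offline on one CPU this gives 84 work units at a 2-hour target, 21 at 8 hours and 7 at 24 hours, so raising the target sharply cuts how much has to come down a dial-up line.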

|

|

Astro Send message Joined: 2 Oct 05 Posts: 987 Credit: 500,253 RAC: 0 |

Someone correct me if I'm wrong; I'm a BOINC alpha tester and know little about Rosetta. I think the Rosetta WUs consist of stages/models/strings (they seem to have the same meaning), and the number of strings run depends on your system's speed and CPU time setting. For example, my Celeron does a string in 40 minutes or so. If I set it to run 2 hours, then I can complete 3 models/strings/stages/cycles. If I set it to 4 hours, then I can do 6, and so on. I've seen one Ralph member make it to 313 models on the same WU. (I wonder if there's actually an end model number???) Anyway, BOINC was expecting the 2-hour WU, but got the 8-hour one for your computer. If you do nothing, BOINC will adjust the estimated time to around the 8-hour timeframe and go into EDF (earliest deadline first) mode. As you say, most likely you won't finish them all by the deadline. You can set it back to 4 hours, keep an eye on it, and change it to 2 hours if you see you won't finish, OR just set it to 2 and do them all on time. Hope this helps. tony |

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

Mikus, the new 4.82 is a change. You now have in the "rosetta preferences" section of "general preferences" under "your account", a setting called "Max CPU run time". This was set by default to 8 when they introduced it. I'm currently running 4.80. The way I got it: I was running 4.79, and the server automatically downloaded 4.80 to me. How does one switch to 4.82 ? mikus -------- p.s. I don't care about credits. Would it be useful to 'Ralph' for me to join? The machine I'm using for Rosetta is an Athlon MP running SuSE 9.0. . |

|

Darren Send message Joined: 6 Oct 05 Posts: 27 Credit: 43,535 RAC: 0 |

Mikus, the new 4.82 is a change. You now have in the "rosetta preferences" section of "general preferences" under "your account", a setting called "Max CPU run time". This was set by default to 8 when they introduced it. Currently 4.80 should only be a windows version. If you have the current linux version, it should be 4.81. As you saw, the upgrades are automatic except on projects that support the anonymous platform, where you have to manually upgrade only that project when a new app comes out. RALPH still has a front-page request for more linux and mac participants, but I don't know how urgent that need is at the moment. They're currently not sending out any work, but you can always sign up and be ready when some does come out. The only thing that may present a problem for you is that some of the ralph work has very short deadlines, since it's just for testing. If you only connect every few days out of necessity, it may cause some problems on the deadlines.

|

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

Well, the server yesterday marked all the WUs still queued up in my client as 'over' (because yesterday was when their 7 days expired). BUT, the server *did* give me credit for the WUs which I processed between yesterday and today, and did *NOT* download any "new" WUs to my system. As long as the server continues to accept "expired" WUs from me, I'll continue with processing them (and will not delete them manually from the queue in my system). mikus -------- p.s. I'm running Linux, and am still on 4.80. The project has NOT seen fit to download 4.81 to me -- perhaps because my system is still overcommitted by that queue of (now-expired) WUs. . |

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

See if this post helps you at all. As I said, my system is currently running (Linux) Rosetta 4.80. Does the new "CPU Time" parameter have any effect with the application version I currently have ? . |

reddwarf Send message Joined: 5 Nov 05 Posts: 11 Credit: 228,026 RAC: 0 |

I am running 1 box with Linux and version 4.81. My CPU time pref is the default (8 hours), and the WUs are running for approximately 8 hours. The same is true for my Windows boxes, running v4.82. |

David E K (Volunteer moderator, Project administrator, Project developer, Project scientist) Send message Joined: 1 Jul 05 Posts: 1480 Credit: 4,334,829 RAC: 0 |

The only difference between the linux and the other versions is that there is no graphics for linux yet. |

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

I uploaded the six (expired by the server, but still queued in my client) WUs that my system processed between yesterday and today. I received credit for three of them. The other three had been re-issued by the server to other people, who had finished them before me. I guess the server "recognizes" whoever first submits a completed result. In consequence, I am now manually ABORTING the 80-odd "leftover" WUs that the BOINC client downloaded to my system, that I did NOT get a chance to finish before their time-to-expire. . |

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

How these applications work out in practice is largely a mystery to me. The only difference between the linux and the other versions is that there is no graphics for linux yet. All this time I was running with (Linux) 4.80 (which did *not* support "Target CPU run time"). After ABORTING the past-expiration WUs, I had to wait until __ALL__ work queued at my system (including one stray WU with a complete-by date in March) had been processed by my system AND had been reported to the server -- only THEN did the project start downloading any new work (or 4.81 itself) to me !! [To avoid another "flood" of WUs, as soon as the request (for 256200 seconds of work - that's what I currently have specified in my General preferences) was sent to the server, I *manually* clicked in BOINCmanager for: "No more work from Rosetta".] I'm now watching 9 WUs being downloaded (preceded by 4.81). [That would be correct if each ran for 8 hours. But earlier than today I had set my "Target CPU run time" value in the Rosetta preferences to 10 hours. Oh, well! Just another example of unexpected behavior, to keep in mind.] I __hope__ I caught the client in time, and NO MORE seconds of work will be requested today. It is taking *effort* to juggle the application's actions to avoid another "too many WUs downloaded" situation. -------- p.s. I was right in what I feared with my initial post to this thread -- I __did__ get OODLES of 'client errors' attributed to me (for all those "too many WUs downloaded" that I then had to manually ABORT after they had expired). . |

|

Terminal* Send message Joined: 23 Nov 05 Posts: 6 Credit: 7,845,878 RAC: 0 |

so isn't it bad for that unit of work if you tell it to finish in 2 hours? wouldn't it be more thorough if it went for as long as it can... like seti... when it finishes, it finishes. this... you can actually tell it WHEN to finish? what's the point in that... shouldn't it just run till it's finished? |

|

BennyRop Send message Joined: 17 Dec 05 Posts: 555 Credit: 140,800 RAC: 0 |

shouldnt it just run till its finished? Regardless of the max cpu time setting, it'll always finish at least one model, even if it's a large WU that takes 10 hours on your machine and your max cpu time is set to less than that. The WU will get passed out until the project gets its 10,000 or so models back. The larger the max cpu time setting, the less frequent communications with the project servers, and the less likely the system will be overwhelmed with communications requests such as happened when we were handed out lots of 15 min WUs and we became a distributed denial of service attack on the project servers. |
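The "at least one model" rule can be sketched like this. It is only an illustration of the rule as stated in the thread, not Rosetta's actual stopping logic. In particular, it assumes the application stops before starting a model that would overrun the target, which the thread does not confirm. The function names are hypothetical.

```python
def models_completed(target_minutes: int, minutes_per_model: int) -> int:
    """Whole models that fit in the target time, but never fewer than one (assumed rule)."""
    return max(1, target_minutes // minutes_per_model)


def run_minutes(target_minutes: int, minutes_per_model: int) -> int:
    """Resulting CPU time for the work unit under the same assumption."""
    return models_completed(target_minutes, minutes_per_model) * minutes_per_model


# The 40-minute model mentioned earlier in the thread.
print(models_completed(120, 40))   # 2-hour target
print(models_completed(240, 40))   # 4-hour target

# A large WU whose single model takes 10 hours, with a 2-hour target.
print(models_completed(120, 600), run_minutes(120, 600))
```

The last case shows why a short target cannot guarantee a short run: one 10-hour model still has to finish, so the work unit runs for 600 minutes regardless of the 2-hour setting.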

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

The larger the max cpu time setting, the less frequent communications with the project servers, and the less likely the system will be overwhelmed with communications requests such as happened when we were handed out lots of 15 min WUs and we became a distributed denial of service attack on the project servers. I have no argument with what you are saying. Yes, allowing longer-running WUs *does* mean that participants get to download from the server less frequently. But it will take a client change to overcome the problem for which this thread was originally opened -- when downloads are SLOW, the current client can __re-request__ (more) work before the download of the *earlier-requested* work has completed. In that case, the second request (plus any follow-on requests) results in TOO MANY WUs DOWNLOADED (no matter what the preferences settings are). . |


©2026 University of Washington

https://www.bakerlab.org