Way too much credit?

Message boards : Number crunching : Way too much credit?

| Author | Message |

|---|---|

Jeff · Joined: 21 Sep 05 · Posts: 20 · Credit: 380,889 · RAC: 0 |

Take a look at these rigs that are pulling in close to 1000 pts/day:

http://www.boincstats.com/stats/host_graph.php?pr=rosetta&id=1197
http://www.boincstats.com/stats/host_graph.php?pr=rosetta&id=1198
http://www.boincstats.com/stats/host_graph.php?pr=rosetta&id=1200
http://www.boincstats.com/stats/host_graph.php?pr=rosetta&id=1201
http://www.boincstats.com/stats/host_graph.php?pr=rosetta&id=1202

Now take a look at their benchmarks, and then continue on to the results:

https://boinc.bakerlab.org/rosetta/show_host_detail.php?hostid=1197
https://boinc.bakerlab.org/rosetta/show_host_detail.php?hostid=1198
https://boinc.bakerlab.org/rosetta/show_host_detail.php?hostid=1200
https://boinc.bakerlab.org/rosetta/show_host_detail.php?hostid=1201
https://boinc.bakerlab.org/rosetta/show_host_detail.php?hostid=1202

This person must know how to "optimize" pretty darn well, considering his rigs are getting ~1000/day. But if you look at the benchmarks and then at how long each unit takes, something doesn't add up to me. If someone can explain how he can benchmark head and shoulders above me and then have his rigs take *MORE* time and get *WAY MORE* credit... I'd appreciate it. :(

Here is one of my AMD 3800x2 rigs, which is running at 2.56GHz:

http://www.boincstats.com/stats/host_graph.php?pr=rosetta&id=1761
https://boinc.bakerlab.org/rosetta/show_host_detail.php?hostid=1761

See how my benchmark is way below his, yet my rig finishes in close to half the time? What am I missing (besides the massive credit this other person seems to be getting)?

Jeff's Computer Farm |

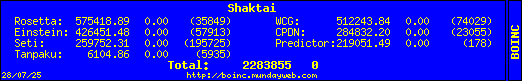

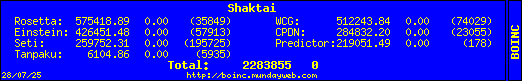

Shaktai · Joined: 21 Sep 05 · Posts: 56 · Credit: 575,419 · RAC: 0 |

Well, just looking at it, I would say that the person benchmarked with HT off, and then turned HT back on but didn't re-benchmark. Their P4 3.4GHz benchmarks are a bit more than double what my P4 3.4GHz benchmarks with HT on, which is why I am guessing that. Might be intentional or might be accidental. They are also probably using an optimized BOINC client.

Looking at their highs and lows, it looks like they may be running more than one project. Some of their high days are the result of not turning anything in the day before. If you look at their RAC, though, they are below you. Still, it is a bit odd, and the benchmarks are inflated by quite a bit. Your 3800 seems to be running about where it should compared to my 4200, so you are not under-benchmarking.

BOINC benchmarking is not ideal at present. Personally, for a project that doesn't require validating work units (to equalize the scoring), I think Rosetta should just reward a set number of points per work unit, as Climate Prediction does. That would put everyone on equal ground, and the BOINC benchmarks couldn't be used to mess with scoring. On projects with multiple validating units, it tends to even things out, because the heavily optimized clients never really get their claimed credit. Of course, that means I might get fewer points too, but at least we would all be on equal ground.

Team MacNN - The best Macintosh team ever. |
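To put rough numbers on why a stale HT-off benchmark matters, here is a minimal sketch. It assumes the benchmark-based claim scales linearly with CPU time and with the average of the two benchmark figures, at 100 credits per CPU-day for a host scoring 1000/1000. That scaling, the function name `claimed_credit`, and every number below are assumptions for illustration; none of them are read from the hosts linked in this thread.

```python
SECONDS_PER_DAY = 86_400


def claimed_credit(cpu_seconds, whetstone_mflops, dhrystone_mips):
    """Hypothetical benchmark-based claim.

    Assumption: 100 credits per CPU-day on a host that benchmarks
    1000 MFLOPS (floating point) and 1000 MIPS (integer).
    """
    benchmark_avg = (whetstone_mflops + dhrystone_mips) / 2.0
    return cpu_seconds / SECONDS_PER_DAY * (benchmark_avg / 1000.0) * 100.0


# Same 3-hour work unit, same real hardware speed; only the stored
# benchmark differs (made-up figures, the second set is doubled).
honest = claimed_credit(3 * 3600, 1500.0, 2500.0)
inflated = claimed_credit(3 * 3600, 3000.0, 5000.0)

print(f"honest claim:   {honest:.2f}")
print(f"inflated claim: {inflated:.2f}")
print(f"ratio:          {inflated / honest:.2f}")
```

Under that assumption the run time does not change at all, yet the claim doubles with the stored benchmark, which matches the pattern described above: longer run times but far more credit.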

Jeff · Joined: 21 Sep 05 · Posts: 20 · Credit: 380,889 · RAC: 0 |

> Well, just looking at it, I would say that the person benchmarked with HT off, and then turned HT back on but didn't re-benchmark. Their P4 3.4GHz benchmarks are a bit more than double what my P4 3.4GHz benchmarks with HT on, which is why I am guessing that.

Thanks for the response. It's funny that you would respond, because I used your rigs to confirm what I was thinking to myself, since we both seem to be running about the same RAC. ;o)

I completely agree that the benchmark is not ideal atm. What is to stop someone from manually benchmarking with a very high overclock, then backing off and running for the next 5 or so days? I mean, I can overclock a lot higher than what I'm running now, but it's not 100% stable. If I added a little cold air to my setup, I'm sure I could turn up my clock for the 45 seconds it takes to benchmark. Then I could go back down to my stable settings and grab some free points. Hell... I used to be an avid benchmarker with three nVentiv Mach 1 phase-change coolers running. If I still had one, I could probably pump my 3800x2 up to 2.9-3.0GHz for the bench and grab 1500 pts/day. :oD

I like your idea of a set credit per work unit. It makes for a more consistent point distribution, in my opinion.

Jeff's Computer Farm |

Webmaster Yoda · Joined: 17 Sep 05 · Posts: 161 · Credit: 162,253 · RAC: 0 |

> Their P4 3.4GHz benchmarks are a bit more than double what my P4 3.4GHz benchmarks with HT on

And your P4 3.4GHz benchmarks are 1.5 times mine with HT on. I'm curious how your benchmarks can be so much faster than mine (what's wrong with MY system?).

> Personally, for a project that doesn't require validating work units (to equalize the scoring), I think Rosetta should just reward a set number of points per work unit, as Climate Prediction does.

The only thing is... how many credits? With CPDN, each trickle takes roughly the same amount of processing time on the same computer with the same settings. With Rosetta, the time taken to process a work unit varies a lot, depending on which protein we're working on. Some work units take more than twice as long as others on the same host.

Would it be fair to get the same amount of credit for a 2-hour work unit as for a 5-hour work unit on the same host? I expect that many "credit junkies" would abort the longer WUs, or leave.

Unless the project could allocate different amounts of credit for different types of work units (as CPDN does with Sulphur runs), I don't think this would be a solution. But even if it can be done, I expect it to be a lot of work for the project team: time better spent on more important issues.

*** Join BOINC@Australia today *** |
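The objection is easy to see with numbers. This is a small sketch under assumed values: the fixed award of 30 credits per work unit is hypothetical, and only the 2-hour and 5-hour run times come from the post above.

```python
def credit_per_hour(fixed_credit_per_wu, run_hours):
    """Effective hourly rate when every work unit pays the same amount."""
    return fixed_credit_per_wu / run_hours


FIXED_CREDIT = 30.0  # hypothetical flat award per work unit

short_wu_rate = credit_per_hour(FIXED_CREDIT, 2.0)  # 2-hour work unit
long_wu_rate = credit_per_hour(FIXED_CREDIT, 5.0)   # 5-hour work unit

print(f"2-hour WU: {short_wu_rate:.1f} credits/hour")
print(f"5-hour WU: {long_wu_rate:.1f} credits/hour")
print(f"short WU pays {short_wu_rate / long_wu_rate:.1f}x more per hour")
```

Whatever flat amount is chosen, the ratio between the two hourly rates stays the same on a given host, which is the incentive to abort long work units that the post worries about.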

Shaktai · Joined: 21 Sep 05 · Posts: 56 · Credit: 575,419 · RAC: 0 |

> Their P4 3.4GHz benchmarks are a bit more than double what my P4 3.4GHz benchmarks with HT on

Hmmm! Good point. Gonna have to give it a lot more thought. Guess nothing comes easy. There probably isn't a perfectly fair system, because of all the variances in systems and operating systems, but there should be some type of reasonable compromise. Don't know what it would be. Every idea so far appears to be even more flawed than the original BOINC system.

Team MacNN - The best Macintosh team ever. |

|

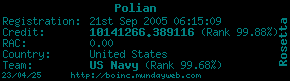

Polian · Joined: 21 Sep 05 · Posts: 152 · Credit: 10,141,266 · RAC: 0 |

> And your P4 3.4GHz benchmarks are 1.5 times mine with HT on. I'm curious how your benchmarks can be so much faster than mine (what's wrong with MY system)

I would start by comparing the operating system, background processes running while the benchmarks ran, memory type, memory timings, and chipset used between the two platforms... and whether the 3.4 GHz chips are the same core or not.

|

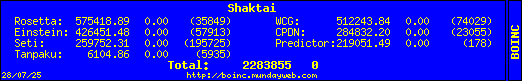

Shaktai · Joined: 21 Sep 05 · Posts: 56 · Credit: 575,419 · RAC: 0 |

> Their P4 3.4GHz benchmarks are a bit more than double what my P4 3.4GHz benchmarks with HT on

I am running the 4.72 beta client, because it seems to have fewer errors and less frequent 1% freezes on my dual-core processors.

Mine:
Measured floating point speed 2188.18 million ops/sec
Measured integer speed 2641.87 million ops/sec

Yours:
Measured floating point speed 1767.55 million ops/sec
Measured integer speed 2384.02 million ops/sec

Actually, it looks like I am about 16% faster, not 50%, unless my math is all messed up. Could you have had some kind of background process running when the benchmarks ran? (I know, probably a silly question, but the only one I can think of at the moment.)

My box is a fully dedicated cruncher with a Pentium 4 550, 1 MB L2 cache, no overclocking. Just a stock Gateway box. Mobo is Intel, 512 MB PC3200 RAM. Windows XP Home Edition. The only other things running are Trend Micro PC-cillin Internet Security and Spyware Doctor. CPU-Z shows it running at 3400.1 MHz. Pretty much right on.

Team MacNN - The best Macintosh team ever. |
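The "about 16%" figure can be checked directly from the four numbers quoted in the post. A quick sketch follows; the variable names are made up, and combining the two benchmarks by simple addition is an assumption about how the figure was reached.

```python
# Benchmark figures exactly as quoted in the post (million ops/sec).
shaktai = {"floating_point": 2188.18, "integer": 2641.87}
yoda = {"floating_point": 1767.55, "integer": 2384.02}


def percent_faster(a, b):
    """How much larger a is than b, as a percentage of b."""
    return (a / b - 1.0) * 100.0


fp_gain = percent_faster(shaktai["floating_point"], yoda["floating_point"])
int_gain = percent_faster(shaktai["integer"], yoda["integer"])
# Assumption: the overall figure compares the sums of both benchmarks.
combined_gain = percent_faster(sum(shaktai.values()), sum(yoda.values()))

print(f"floating point: {fp_gain:.1f}% faster")
print(f"integer:        {int_gain:.1f}% faster")
print(f"combined:       {combined_gain:.1f}% faster")
```

The combined figure lands a little above 16%, consistent with the post, while the floating-point gap on its own is noticeably larger than the integer gap.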

Webmaster Yoda · Joined: 17 Sep 05 · Posts: 161 · Credit: 162,253 · RAC: 0 |

> Actually, it looks like I am about 16% faster, not 50%, unless my math is all messed up.

It may have been running a few extras when the previous benchmark ran, so I did a manual benchmark (after stopping Rosetta). The figures are indeed a bit better now; they used to be 1468 and 1797.

Hardware specs for yours and mine appear to be close, as mine is also a P4 550, on an Intel (D915GAV) mobo, with 1GB PC3200 RAM. I guess one difference is that I run two AV programs on it and it's doing more than just crunching (it is currently running as a web server and mail server, as well as being my regular PC).

That should change by the end of the month, when I expect to get a proper server (dual Xeon). And yes, it will crunch too :-)

*** Join BOINC@Australia today *** |

Paul D. Buck · Joined: 17 Sep 05 · Posts: 815 · Credit: 1,812,737 · RAC: 0 |

We have extensively discussed the benchmarking in the SETI@Home NC forum. The "best" proposal I know of (disclaimer: it was me that proposed it) would rely on some effort to classify the processing requirement in a more exact way than these measurements do and, with some other "trickery", eventually "calibrate" all the systems so that the variances would be lower.

This may happen, or it may not. At THIS time I do not have the ability to pursue this on my own. But the current need is to take the SETI@Home science application, put in counters to capture the number of loops, and work out how many operations that really means (instead of the very big WAG we use now) for the "Reference" work unit.

Knowing this to a higher degree of accuracy, I can now run this work unit on several computers. Those computers' run times can now be accurately pegged to a level of performance. The most accurate benchmark of a computer is the actual work.

Now, the argument AGAINST this is that the SETI@Home work unit is not a project-independent measurement of performance. I agree. The benchmarks are even more project-independent, in that they represent NO current BOINC project at all. Oh, and they are not very stable. Yet the work units are. And the PROPORTIONS of time between projects are "reasonable": one of my computers that takes 4 hours for SETI@Home and 2 for Predictor@Home is twice as fast as one that takes 8 and 4, for example. And THOSE proportions "hold up" as a good metric.

So maybe using SETI@Home as a metric to start with is not so bad after all. From there, projects willing, we can do the same.

So, how does that benefit us? Well, let us say that we have "calibrated" one of my computers. I am in a "Quorum of Results", and I took 1,000 seconds to perform the work. You took 2,000 seconds, so your machine is 1/2 the speed of mine. Our claims SHOULD BE THE SAME. If they are not, we can fix that.

Bottom line: this is all "fixable", but the priority is low, and perhaps rightly so. I would argue that, with BOINC doing as well as it is, it is probably in our interest to invest the time now. But I don't run things. Still, given the strength of the community's interest, perhaps it should be of higher priority.

In theory, the "averaging", tossing of high/low claims, and so forth evens out the inequities, but we do have the sense that it does not.

Last point: CPDN and Rosetta@Home can use a different method, one that the calibration is really an attempt to pinpoint more precisely, which is to use the CS per second. Just give a fixed rate of credit per calculation second. You take longer per work unit, but you get the same reward I do for the same unit of time. Inflated benchmarks no longer come into play, because they are not used to claim performance that was expected but never delivered. |
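The two ideas in this post can be sketched in a few lines. Everything named here is hypothetical: the operation count of the reference work unit, the credit rates, and the function names are made up for illustration and are not part of BOINC or of the original proposal's details.

```python
REFERENCE_WU_OPS = 3.0e13   # hypothetical counted operations in the reference WU
CREDIT_PER_OP = 1.0e-12     # hypothetical credit awarded per counted operation
CREDIT_PER_SECOND = 0.003   # hypothetical flat rate for the per-second scheme


def calibrated_speed(reference_wu_seconds):
    """Ops/sec measured by timing the reference work unit, not a synthetic benchmark."""
    return REFERENCE_WU_OPS / reference_wu_seconds


def calibrated_claim(speed_ops_per_sec, cpu_seconds):
    """Claim based on calibrated speed times the time actually spent."""
    return speed_ops_per_sec * cpu_seconds * CREDIT_PER_OP


def fixed_rate_claim(cpu_seconds):
    """Alternative scheme: a flat rate per CPU second, with no benchmark involved."""
    return CREDIT_PER_SECOND * cpu_seconds


# Calibration: the fast host runs the reference WU in 500 s, the slow one in 1000 s.
fast_speed = calibrated_speed(500.0)
slow_speed = calibrated_speed(1000.0)

# Later, both hosts are in a quorum for the same work unit (1000 s vs 2000 s).
fast_claim = calibrated_claim(fast_speed, 1000.0)
slow_claim = calibrated_claim(slow_speed, 2000.0)

print(f"fast host claims {fast_claim:.2f}, slow host claims {slow_claim:.2f}")
print(f"flat-rate claim for 1000 s: {fixed_rate_claim(1000.0):.2f}")
```

In this sketch the slower host takes twice as long but is calibrated at half the speed, so the two claims for the same work unit come out equal. Under the flat per-second scheme the claim depends only on time spent, so a doctored benchmark has nothing to inflate.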


©2025 University of Washington

https://www.bakerlab.org