unnecessary earliest-deadline-first scheduling

| Author | Message |

|---|---|

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

Rosetta is the only BOINC project on this system, which runs off-line (except for occasional connects). My preferences specify the CPU time per WU as 10 hours, and the interval between connects as 6 days.

On Mar 12 I did a download to "refresh" the queue of ready WUs on my system. When the currently running WU completed, the next WU failed in less than one minute with code 1 (its problem is known, and has been solved by now). Two WUs then completed normally; the next WU after that again got code 1 (same problem). HOWEVER, after the 'finished' message for this failed WU, there was now the message "Resuming round-robin CPU scheduling". There had been *no* previous messages about scheduling methods.

The next WU completed normally. HOWEVER, __eight hours__ into the processing of the WU after that (which eventually completed normally), there was the message "Using earliest-deadline-first scheduling because computer is overcommitted".

Note that there were about 100 hours of work in the queue, with the earliest expiration deadline about 10 days away. Plus the system had been processing 10-hour CPU time WUs for weeks now. There was NO REASON for the client to say the computer was overcommitted.

<Today I was again able to do a download to "refresh" the queue of ready WUs at the system, *despite* the last (spurious?) scheduling method message having told me the computer was 'overcommitted'.> |

|

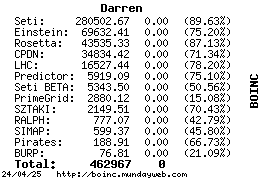

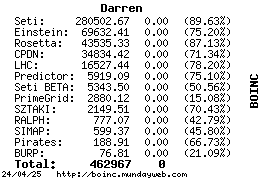

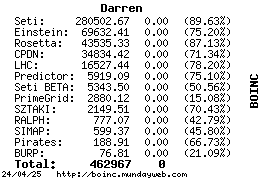

Darren Send message Joined: 6 Oct 05 Posts: 27 Credit: 43,535 RAC: 0 |

Quote: Rosetta is the only BOINC project on this system, which runs off-line (except for occasional connects). My preferences specify the CPU time per WU as 10 hours, and the interval between connects as 6 days.

This is just one of those weird little oddities that boinc has. Keep in mind that when you give it a "connect every" setting, it assumes that it will actually be restricted exactly to that time frame. In your case, with a 6 day connect setting, once boinc has gone a couple of days into the queue, if it is allowed to actually reconnect again it will determine that it must finish everything that is due in less than about 10 days before the next connection, which will occur in 6 more days. That is because it assumes that after the next connection it won't be allowed to connect again until 6 days later (and after the expiration of some of the work units).

I see from your results that 2 of the work units downloaded on the 12th were reported on the 14th. At that point, boinc assumes 6 days till the next connection (20th), then 6 days after that for another (26th). Since that connection was made after the time of day at which the work units due on the 26th expire, boinc would assume that all work units due on the 26th would now have to be finished before the 20th. Otherwise the connection on the 26th (based on a strict 6 day connect interval) would occur about an hour too late to report those work units.

The message will have no real-world effect with the host attached to only one project, as everything will keep processing just like it normally would have anyway.

|

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

Thanks for telling me. (Minor point: the client's EDF calculation <on the 14th> was done while running offline - hours before the connection occurred.)

But the client's arithmetic was WRONG !! It ran its EDF calculation on the morning of Mar 14. There were about 100 hours of work to do in its queue. It __would__ have finished before the 20th !! |

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

Quote: This can happen if some number of the workunits exceed the expected runtime. For example if a number of 10 hour WUs actually run for 10:45, your system would begin to realize that they might all run that way, and you would then possibly be over committed at the early part of the queue. But as the queue runs, this would change. What changed in this case was a few WUs crashed and reduced the workload in the queue.

You did not catch what I was complaining about:

- My reporting interval was specified as 6 days
- The queue at the client held ONLY about 100 hours of work (14-day WUs)

So far, *every* WU (before or after) has run very close to 10 hours. I think "exceeding the expected runtime" does not apply here (unless crashing 1-minute WUs *increase* the "history" value?).

On another thread, it was said: 'if a project deadline is less than double the cache size, the box will run in EDF mode'. That seems to be what was happening here - blind adherence to that rule. Perhaps people who prefer a sizeable cache size are not suitable for Rosetta's 14-day WUs. |

|

Darren Send message Joined: 6 Oct 05 Posts: 27 Credit: 43,535 RAC: 0 |

Quote: On another thread, it was said: 'if a project deadline is less than double the cache size, the box will run in EDF mode'.

That is correct, and that is what I thought had happened in your case, as the manual connect you made 2 days after the prior connection would have "reset" the time frame for determining when the 6 day connection setting would apply - basically making boinc think it had to fit 6 + 6 (for 2 normal connections) days' worth of work into 2 + 6 (the manual connection plus the next normal connection) days on the calendar. Since it occurred before that manual connection, it's something a bit more complex than that, though. Working like it should, if the due date isn't at least 2 normal connection times away, it will always think it has to do all the work before the next connection - thus the "deadline must be at least double the connection time (or cache size, if you prefer)" guidelines.

Quote: And yes the crashed WUs would effect a change in the expected run time but it should make it shorter

In the case of a 1 minute crashed work unit, the change would be very small - but enough of them followed by a quick reconnect before it adjusts back up could cause an extra work unit to download.

Just FYI for anyone who's interested, any work unit that runs less than 10% of the estimated run time only adjusts the estimated run time of the remaining work units by 1% of the run time difference. If the normal run time is 10 hours (600 minutes), a work unit that crashes in 1 minute would only reduce the estimated run time by 6 minutes (5.99 minutes to be exact), as the difference between what it expected and what happened was 599 minutes and the total run time was less than 10% of the expected run time. If the run time is between 10% and 100% of the expected run time, the DCF (duration correction factor) adjusts the run time by 50% of the difference. So if a 600 minute work unit for some reason only ran 300 minutes, it would adjust the estimated run time down 150 minutes (50% of the 300 minute difference between what it expected and what happened).

In short, all that just means that a work unit that crashes quickly will have only an absolutely minimal effect. One that runs for a reasonable time and then crashes would have a much more dramatic effect. This 10% factor was put in specifically so corrupt work units wouldn't cause a drastic change in estimated run times for the remaining work units.
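The adjustment rule described in the post above can be sketched in a few lines of Python. This only illustrates the percentages quoted there and is not the BOINC client's actual code; the function name `adjusted_estimate` is hypothetical, and since the post does not say what happens when a work unit overruns its estimate, that case is deliberately left out.

```python
def adjusted_estimate(expected_min: float, actual_min: float) -> float:
    """New run-time estimate (minutes) after one work unit ends.

    Hypothetical helper following the rule as described in the post:
    - ran less than 10% of the estimate: shift by 1% of the difference
    - ran between 10% and 100% of it:    shift by 50% of the difference
    """
    if actual_min > expected_min:
        # Overruns are not covered by the description in the post.
        raise ValueError("overrun case is not described in the post")
    difference = expected_min - actual_min
    weight = 0.01 if actual_min < 0.10 * expected_min else 0.50
    return expected_min - weight * difference


# A 600-minute work unit that crashes after 1 minute:
quick_crash = adjusted_estimate(600, 1)    # estimate drops by 5.99 minutes
# A 600-minute work unit that stops after 300 minutes:
half_run = adjusted_estimate(600, 300)     # estimate drops by 150 minutes
```

Under these assumptions a one-minute crash barely moves the estimate, while a half-length run moves it by a quarter, which matches the two worked figures in the post.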

|

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

Quote: On another thread, it was said: 'if a project deadline is less than double the cache size, the box will run in EDF mode'.

I *am* agreeing to what you've said -- but did you note my "minor correction"?

There was a download Mar 8. The next download was on Mar 12 - it would have "reset" the time frame. By Mar 13, all WUs downloaded Mar 8 had finished, and only WUs downloaded Mar 12 were left. On Mar 14 (__BEFORE__ the Mar 14 connection was ever made) the current time plus 'twice 6 days' moved past the Mar 26 deadline, and the client apparently decided that the computer was overcommitted. At the time this decision by the client was made, the most recent "connection which reset the time frame" was that of Mar 12, __NOT__ that of Mar 14 (which was still to come - some hours later on Mar 14).

<I manually initiated the Mar 14 connection upon seeing the "switching to EDF mode" message already there in the BOINC window - I wished to have all 'results to date' uploaded to the server before I started posting about them. And I was surprised that (without ever issuing any message about ending EDF mode) the client proceeded to *request* (once network connection was allowed via the boinc manager) yet more work to be downloaded!> |
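As a rough illustration of the "deadline less than double the connect interval" rule discussed in this thread, the sketch below finds the moment at which a Mar 26 deadline falls inside two 6-day connect intervals. The year and the time of day are assumptions (the thread gives only dates), the deadline is taken as exactly 14 days after the Mar 12 download, and the function name is hypothetical. It is not the BOINC scheduler's actual logic.

```python
from datetime import datetime, timedelta


def deadline_inside_two_intervals(now: datetime,
                                  deadline: datetime,
                                  connect_interval: timedelta) -> bool:
    """True once 'now + 2 * connect interval' lies beyond the deadline."""
    return now + 2 * connect_interval > deadline


connect_interval = timedelta(days=6)
downloaded = datetime(2006, 3, 12, 9, 0)      # assumed year and time of day
deadline = downloaded + timedelta(days=14)    # Mar 26, same time of day

# Earliest moment at which the rule starts to apply: 12 days before the deadline.
crossover = deadline - 2 * connect_interval   # Mar 14, 09:00 under these assumptions

before = deadline_inside_two_intervals(datetime(2006, 3, 13, 9, 0),
                                       deadline, connect_interval)   # False
after = deadline_inside_two_intervals(datetime(2006, 3, 14, 10, 0),
                                      deadline, connect_interval)    # True
```

Note that this check looks only at the calendar and never at how many hours of work are actually queued, so under this reading it would trip on Mar 14 regardless of any connection made that day.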

|

mikus Send message Joined: 7 Nov 05 Posts: 58 Credit: 700,115 RAC: 0 |

Quote: Well I guess I am having some trouble understanding your situation. If your queue is set to 6 days that is 144 hours assuming you crunch 24/7. 144 divided by your 10 hour time setting is 14.4 WUs. The system should have given you 14 WUs but if it gave you 15, which is possible, then the system would have probably gone into EDF mode right off. But let's assume it gave you 14, and as you say they are all 12 day workunits. All things being equal that should not push the system into EDF mode in and of itself. But change any of those conditions and it could. And yes the crashed WUs would effect a change in the expected run time but it should make it shorter, which might cause you to get more than the 14 WUs and push you into EDF Mode.

Please note that in this thread I am NOT commenting on "how many seconds of work were requested/downloaded". I __AM__ commenting on the following situation:

- On Mar 12, enough WUs were downloaded to make up 144 or so hours of work for my system to do. On Mar 12, the system appeared to be processing normally (round-robin scheduling mode) the WUs then in its work queue.
- On Mar 14, some of those WUs having finished (and *no* new WUs having been added), the system had about 100 hours of work to do -- but NOW (on Mar 14) it switched to EDF scheduling mode (for deadlines of Mar 26) -- though it could expect to finish the existing work in its queue by Mar 19 !!

I thought this behavior worth posting about, since if I *were* to add another project, HOW the system behaved here was different from what I was expecting. <Even taking into account that Darren told me that I should in my mind *subtract* the "connect interval" value from the WU deadline!> |
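The arithmetic in the post above can be checked with a short Python sketch. The year, the time of day on Mar 14, and the time of day of the Mar 26 deadline are assumptions made only so the dates can be computed; the thread itself gives just the dates, the 10-hour WU length, the 6-day interval, and the roughly 100 hours of queued work.

```python
from datetime import datetime, timedelta

# Queue sizing from the quoted reply: 6 days of 24/7 crunching at 10 hours per WU.
connect_interval_hours = 6 * 24                        # 144 hours
wu_hours = 10
wus_to_fill_queue = connect_interval_hours / wu_hours  # 14.4 work units

# Situation on Mar 14: about 100 hours of work left, processed around the clock.
edf_switch = datetime(2006, 3, 14, 8, 0)   # assumed year and time of day
remaining_work = timedelta(hours=100)
expected_finish = edf_switch + remaining_work          # Mar 18, 12:00 under these assumptions

deadline = datetime(2006, 3, 26, 8, 0)     # assumed time of day for the Mar 26 deadline
slack = deadline - expected_finish                     # time to spare before the deadline
```

With these assumed times the queue would drain on Mar 18, before Mar 19 as stated in the post, leaving about a week of slack before the Mar 26 deadline.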

|

Darren Send message Joined: 6 Oct 05 Posts: 27 Credit: 43,535 RAC: 0 |

Quote: I *am* agreeing to what you've said -- but did you note my "minor correction"?

Yes, I did, that's why I said "Since it occurred before that manual connection, it's something a bit more complex than that, though." But from the info that's available - nothing really more than the host info showing your work units' download and report times - it will probably never be possible to figure out what triggered it. If you can go back far enough in your local logs to see it, there may be more of an indication there, but still not likely.

It could have been any of a few different things. It could have been something as simple as the benchmarks running and getting a slightly lower result than before, at which point the client will assume - based on those new benchmarks - that the work units will take longer. Remember that the boinc client doesn't know how rosetta works with the defined time, so if the benchmarks change the client will assume the run times will also change. After a couple more work units finish, the DCF will have adjusted, and even with a new benchmark your estimated run times (as far as the boinc client is concerned) will be back to 10 hours, though as far as rosetta is concerned they will never have left 10 hours.

If it's not happening every time you get new work, it's nothing to worry about and its cause is probably going to be completely untraceable. If it happens every time you get new work, then watching it closely to see what is changing as it goes into and out of EDF will help narrow it down.

|


©2025 University of Washington

https://www.bakerlab.org